Getting the most out of Vary with Fastly

One of the most popular articles on the Fastly blog is my colleague Rogier “Doc” Mulhuijzen’s “Best practices for using the Vary header.” It was published in 2014, and since then some Fastly customers (including my former employers at the Financial Times and Nikkei) have started using Vary for everything from A/B testing to internationalization, in increasingly creative ways. But at the same time many people still use Vary badly or misunderstand what it does.

This post aims to provide an expanded guide, including some of the more exotic ways you can get value out of Vary in intermediate caches like Fastly.

How Vary works: a recap

A Vary response header tells caches that a particular header (or headers) from the request should be used to construct a secondary cache key that must match in order to use the cached response to answer subsequent requests. If that sounds like alien nonsense to you, you’re not alone. The best way to understand it is by looking at an example.

A common case is Vary: Accept-Language, which allows a server to produce versions of the same webpage in different languages and serve them to browsers based on their local language settings. Imagine we receive this request:

GET /path/to/page HTTP/1.1
Host: example.com

When this request arrives at Fastly, we will use the vcl_hash function in VCL to determine what the cache key for that object is. By default, this will be a combination of the URL host and full path (including the querystring, if there is one):

cache-key = req.http.host + req.url
          = "example.com/path/to/page"

We then try to find an object in the cache that matches this key. This fails, because we don’t have that object in cache, so we send a request to your origin server. Your application sees that the request does not include an Accept-Language header, so you use your site’s default language. You’d obviously prefer to serve the right language if you can, so you don’t want us to serve this response as a cache hit if a future user requests a specific language. To avoid this you include Vary: Accept-Language in your response headers, even though none was specified on the request.

Back at Fastly, we receive your origin response, notice your Vary header and copy the value of Accept-Language from the original request into a “vary-key” for your response. Since there was no Accept-Language header on the request, the vary-key for this cache object will be an empty string.

| Cache-key | "example.com/path/to/page" |
| Vary-key | "" |
| Response object | |

Now Fastly receives a second request, for the same URL:

GET /path/to/page HTTP/1.1
Host: example.com
Accept-Language: ja-jp,en;q=0.8

The vcl_hash function produces the same cache key as before. It’s a hit! But wait, the object we found in the cache also has a vary-key, so we look at the value of the Vary header, and use it to construct a vary-key from the request, which in this case is:

| Cache hit’s Vary header | “Accept-Language” |
| Request’s ‘Accept-Language’ value | “ja-jp,en;q=0.8” |
| Cache hit’s vary-key | “” |

Although the response we have in cache matches the cache key generated for the incoming request, it doesn’t match the vary-key (because “” != “ja-jp,en;q=0.8”). So this is actually a miss; we’ll send this request to your origin server.

This time, your origin server sees the Accept-Language header, generates a response in Japanese, and serves that response out to Fastly. Again you ensure you include a Vary: Accept-Language header. When that response arrives at Fastly, we see the Vary header and store a new cache object:

| Cache-key | "example.com/path/to/page" |
| Vary-key | "ja-jp,en;q=0.8" |
| Response object | |

Now we have two cache objects, both matching the same cache key, but with different vary-keys (although both cached responses have the same Vary value, the inbound requests that triggered them had different values in the relevant header).

When a third request arrives with another new Accept-Language (maybe “es-es” for Spanish), we will calculate the cache key using vcl_hash as normal, and we’ll find it matches two existing cache objects. We’ll then calculate a vary-key from the new request separately for each cache hit, just in case their Vary headers refer to different request headers:

| | Object 1 | Object 2 |
|---|---|---|
| Vary header on cache object | “Accept-Language” | “Accept-Language” |
| Computed vary-key for active request | “es-es” | “es-es” |
| Value of the cache object’s vary-key | “” | “ja-jp,en;q=0.8” |
| Match? | No | No |

Since the key has to be recomputed for each cache object, this is much less efficient than using the vcl_hash function, which is why we limit each cache object to 70 variations. In practice, all the cached objects for the same URL should in most cases have the same Vary header (as they do above), and later we’ll come to what happens when they don’t.

Important: one of the reasons that people find Vary hard to understand is that the value of a Vary header is not a key, it’s an algorithm for generating a key. While the key itself will differ for each variation, the method of generating it could be the same (but doesn’t have to be).
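To make the "algorithm, not a key" point concrete, here is a minimal Python model of the two-stage lookup walked through above. It is a sketch for illustration only, not how Fastly is implemented: the `VaryCache` and `CachedObject` names are mine, request header names are assumed to be lower-cased already, and expiry and the variation limit are ignored.

```python
from dataclasses import dataclass, field


@dataclass
class CachedObject:
    vary: tuple       # header names taken from the response's Vary header
    vary_key: tuple   # values those headers had on the request that was stored
    body: str


@dataclass
class VaryCache:
    # Primary cache key (host + URL) -> every variant stored under that key.
    objects: dict = field(default_factory=dict)

    @staticmethod
    def vary_key(vary, request_headers):
        # The Vary value is the recipe; the vary-key is what the recipe yields
        # for one particular request. A missing header counts as "".
        return tuple(request_headers.get(name.lower(), "") for name in vary)

    def lookup(self, host, url, request_headers):
        for obj in self.objects.get(host + url, []):
            # Recompute the key per candidate: each one may carry a different Vary.
            if self.vary_key(obj.vary, request_headers) == obj.vary_key:
                return obj
        return None  # a miss, so the request would go to the origin

    def store(self, host, url, request_headers, vary_header, body):
        vary = tuple(n.strip() for n in vary_header.split(",") if n.strip())
        obj = CachedObject(vary, self.vary_key(vary, request_headers), body)
        self.objects.setdefault(host + url, []).append(obj)
        return obj


cache = VaryCache()
page = ("example.com", "/path/to/page")
cache.store(*page, {}, "Accept-Language", "default-language page")
cache.store(*page, {"accept-language": "ja-jp,en;q=0.8"},
            "Accept-Language", "Japanese page")
```

With those two objects stored, a lookup for `{"accept-language": "es-es"}` finds the cache key but matches neither vary-key, which is the miss described in the table above.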

So, now that we understand the basics of Vary, here are some rules of thumb:

- If your origin server’s response depends on the value of a request header (including the absence of it), then include that header name in a Vary response header.

- Don’t vary on things that typically have many possible values, like User-Agent, because it will create too many variations, you will have a very poor cache hit ratio, and you’ll quickly hit the 70 variation limit.

- Use the same value of Vary for variations of the same URL.

Accept-Language is a great example of using Vary well, and it’s frustrating that too often developers choose to instead use geolocation as a proxy for language choice. Just because I’ve physically travelled to Japan doesn’t mean I suddenly speak Japanese (I really wish it did). My browser is telling you which languages I speak, so you should use that data, not my physical location. All browsers send this header, so there’s no reason not to use it.

Accept-Language geographic clustering

That said, there is obviously at least a strong correlation between your geographic location and your likely preferred language. We can see that effect if we look at the most popular Accept-Language values seen at some of Fastly’s points of presence (POPs):

| | Washington DC | Frankfurt | Tokyo |
|---|---|---|---|
| 1 | en-us | en-US,en;q=0.8 | ja-jp |
| 2 | en-US,en;q=0.8 | it-IT,it;q=0.8,en-US;q=0.6,en;q=0.4 | ja-JP,en-US;q=0.8 |
| 3 | en-US | en-us | ja-JP |
| 4 | en-US,en;q=0.5 | it-it | ja-JP,ja;q=0.8,en-US;q=0.6,en;q=0.4 |
| 5 | en | tr-tr | ja,en-US;q=0.8,en;q=0.6 |
| 6 | pt-BR,pt;q=0.8,en-US;q=0.6,en;q=0.4 | ru | ja |
| 7 | en\_US | tr-TR,tr;q=0.8,en-US;q=0.6,en;q=0.4 | ko-KR,ko;q=0.8,en-US;q=0.6,en;q=0.4 |
| 8 | es-ES,es;q=0.8 | pl-PL,pl;q=0.8,en-US;q=0.6,en;q=0.4 | ko-KR |
| 9 | en,\* | ru-RU,ru;q=0.8,en-US;q=0.6,en;q=0.4 | en-us |
| 10 | en-US;q=1 | de-de | ko-KR,en-US;q=0.8 |

Thanks to dense interconnects between European countries, Frankfurt serves a wide area of Europe and may be the optimum POP for users all over the continent. As a result, English still tops the list, with a variety of European languages making up the rest. In contrast, POPs in the US disproportionately receive requests for English, Spanish, and Portuguese, and POPs in Japan unsurprisingly are asked to speak Japanese.

Reducing vary granularity with language_lookup

The list above is messy — case differences, random combinations of different possible languages and priorities. The top six requested languages in Tokyo are all Japanese but just slightly differently phrased. Headers on which we vary often have a long tail of possible values, including garbage values, which will destroy cache performance.

In the earlier example, we saw a vary-key of “ja-jp,en;q=0.8”, which includes not just the main language, but also the variant (Japanese as spoken in Japan), a second choice (in this case English, any variant), and a priority (0.8). This is all pretty specific to the user making the request, and other Japanese users might have slightly different settings. Added to this, our origin server might not actually support Japanese.

Fastly offers a VCL extension to help with this problem. Using the accept.language_lookup function in VCL (within vcl_recv), we can translate the user’s requested Accept-Language into a normalized language code that we know the origin server supports:

set req.http.Accept-Language =
  accept.language_lookup("en:de:fr:pt:es:zh-CN", "en",
    req.http.Accept-Language);

If an inbound request specifies Accept-Language: ja-jp,en;q=0.8, then the above code would transform that header into: Accept-Language: en, because the user is OK with English, and our backend server doesn’t support Japanese.

This is great, because now that the request has been redefined, the origin can respond with an English response and we will cache that with a vary-key of simply “en”.

| Cache-key | "example.com/path/to/page" |
| Vary-key | "en" |
| Response object | |

Even for the case when an incoming request has no Accept-Language header, this normalization process will add an Accept-Language: en header, meaning that we’ll only cache one copy of the content for each language that we support.
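If it helps to see the normalization as code, the following Python function is a rough approximation of the kind of matching described here. It is not Fastly's implementation of accept.language_lookup, and the real function may differ in its details; `lookup_language` is a name I made up, and the prefix-stripping fallback (trying "ja" after "ja-jp") is my assumption about reasonable behaviour.

```python
def lookup_language(supported, default, accept_language):
    """Pick one supported language for an Accept-Language header value."""
    # Same "en:de:fr" list format as the VCL call above; compare case-insensitively.
    available = {tag.lower(): tag for tag in supported.split(":")}
    if not accept_language:
        return default

    ranges = []
    for position, item in enumerate(accept_language.split(",")):
        tag, _, params = item.strip().partition(";")
        tag = tag.strip().lower()
        quality = 1.0  # a range without an explicit q value has top priority
        for param in params.split(";"):
            name, _, value = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0  # treat a garbage q value as "not acceptable"
        if tag and quality > 0:
            ranges.append((-quality, position, tag))

    # Highest quality first; ties keep the order the browser sent them in.
    for _, _, tag in sorted(ranges):
        # Try the full tag, then progressively shorter prefixes ("ja-jp" -> "ja").
        while tag:
            if tag in available:
                return available[tag]
            tag = tag.rpartition("-")[0]
    return default


SUPPORTED = "en:de:fr:pt:es:zh-CN"
normalized = lookup_language(SUPPORTED, "en", "ja-jp,en;q=0.8")  # "en"
```

The useful property is that the long tail collapses: with a hypothetical supported list of "en:ja", all six of the top Tokyo values in the table above come out as "ja", so they would share a single vary-key.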

Reducing granularity further with private headers

Using VCL, Fastly customers can add extra headers to a request before forwarding it to an origin server. Can the origin server Vary on those headers, even though they are not sent from the browser? Yes! But there are a couple of important considerations.

Sometimes, we can derive some important piece of data from the request in VCL, but rather than overriding the existing header, we create a new one. For example, say our homepage looks different depending on whether you are anonymous, logged in as a free user, or logged in as a paid user. Every request we receive has a cookie which identifies the user to us (or not, for anonymous users), but those values are unique to each user. For example:

Cookie: Auth=e3J0eXAiOxJKV1QiLTJhbGciOiJIUzI1NiJ9.eyJhdWQiOiJybmlra2VpX3dlYiIsImlzcyI6ImFwaWd3Lm44cy5qcCIsImRzX3Jhbmsi;

If the origin server were to vary on Cookie, then we’d have to store a separate variant for every user, which would be very bad for our cache performance. Instead, in VCL we could read the cookie header, decrypt it, derive some useful data about the user, and create some new headers. We’ll skip over how that transformation works, because that’s not the purpose of this post, but imagine that we end up adding headers such as:

Cookie: Auth=e3J0eXAiOxJKV1QiLTJhbGciOiJIUzI1NiJ9.eyJhdWQiOiJybmlra2VpX3dlYiIsImlzcyI6ImFwaWd3Lm44cy5qcCIsImRzX3Jhbmsi;
Fastly-UserID: 12345
Fastly-UserRole: free-user
Fastly-UserGroups: 53, 723, 111

If the origin server is generating a page that only varies based on a user’s role, then the response can pick out just that one header:

Vary: Fastly-UserRole
Cache-Control: max-age=31536000

However, unlike the Accept-Language transformation, which just slightly cleans up a header that the browser originally sent, this Vary rule acts on a private header, and is meaningless to the web browser. To avoid this being a problem, we need to transform the response in the Fastly vcl_deliver stage, before sending it back to the browser.

We can either change the Vary to reference the request header from which we derived the private data (in this case, Cookie), or disable downstream caching entirely and remove the Vary header. Varying on Cookie is likely to be a death sentence for caching anyway (anyone using Google Analytics will be changing their cookies on every request), so we may as well keep it simple:

unset resp.http.Vary;
set resp.http.Cache-Control = "private, no-store";

If we don’t do this, the user could sign out and continue to see pages intended for logged-in users, because without a Fastly-UserRole header in the request, the browser will match a previous response for any role.
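The deliver-stage clean-up can be modelled in a few lines of Python. This is a sketch of the idea rather than anything Fastly runs: `prepare_for_browser` is a hypothetical helper, and it assumes every edge-only header starts with "Fastly-". It is also slightly gentler than simply removing Vary, because it keeps any public header names (such as Accept-Encoding) that the browser can still evaluate.

```python
PRIVATE_PREFIX = "fastly-"  # assumption: all edge-only headers share this prefix


def prepare_for_browser(response_headers):
    """Return a copy of the response headers that is safe to send downstream."""
    headers = {name.lower(): value for name, value in response_headers.items()}
    varied = [n.strip() for n in headers.get("vary", "").split(",") if n.strip()]
    public = [n for n in varied if not n.lower().startswith(PRIVATE_PREFIX)]
    if len(public) == len(varied):
        return headers  # nothing private in Vary, so leave the response alone

    # The browser never sends the private headers, so it cannot evaluate them.
    if public:
        headers["vary"] = ", ".join(public)
    else:
        del headers["vary"]
    # Stop the browser reusing a response that was chosen using private data.
    headers["cache-control"] = "private, no-store"
    return headers


origin_response = {"Vary": "Fastly-UserRole", "Cache-Control": "max-age=31536000"}
browser_response = prepare_for_browser(origin_response)
```

The long max-age still applies to the object in the Fastly cache; only the copy sent to the browser is marked as uncacheable.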

Reducing granularity with variable Vary values for the same URL

Earlier, I said that you should, as a general rule, return consistent Vary values for variations of the same URL. In most cases this makes sense, but it’s also wasteful in cases where you are varying on multiple headers, and where those headers interact in a predictable way. For example, imagine you have a homepage with a series of A/B tests in progress, which only apply if the user is signed in. In VCL, we can add some interesting request headers to an incoming request:

Fastly-UserRole: anon-user
Fastly-ABTestFlags: A

When this request is forwarded to the origin server, it generates a homepage for the anonymous user, and ignores the A/B test flags, because we don’t run A/B tests for anonymous users. However, because there’s the possibility that someone requesting this URL might be logged in, and therefore might need to vary on the test flags, we’ll vary on test flags for all variations of the homepage:

Vary: Fastly-UserRole, Fastly-ABTestFlags

In cache, this will effectively result in Fastly cache nodes storing a separate variant for every combination of user role and test bucket.

Notice that while this Vary value has resulted in us correctly storing the different variations of the A/B test buckets, we’re also storing one copy of the anonymous-user homepage for each test bucket as well, even though all anonymous users get the same experience regardless.
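A quick way to see the waste is to enumerate the vary-keys. The Python below is only an illustration: the "paid-user" role name and the two test buckets are made up for the example, and the page bodies are placeholders.

```python
from itertools import product

ROLES = ["anon-user", "free-user", "paid-user"]  # "paid-user" is a hypothetical name
BUCKETS = ["A", "B"]                             # hypothetical A/B test buckets


def page_for(role, bucket):
    # Anonymous users are never in a test, so the bucket is ignored for them.
    if role == "anon-user":
        return "anonymous homepage"
    return f"{role} homepage, test {bucket}"


stored = {}
for role, bucket in product(ROLES, BUCKETS):
    vary_key = (role, bucket)  # Vary: Fastly-UserRole, Fastly-ABTestFlags
    stored[vary_key] = page_for(role, bucket)

variants = len(stored)                      # 6 objects in cache
distinct_pages = len(set(stored.values()))  # but only 5 different pages
```

The gap between the two numbers grows with every test bucket you add, because each new bucket stores another identical copy of the anonymous page.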

There are a number of ways of fixing this. We could consolidate the headers into one header, and reduce the number of permutations, but I find a cleaner solution is to change the Vary response from the origin based on which headers were interrogated during the request:

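| REQUEST | RESPONSE |
|---|---|
| Fastly-UserRole: anon-user, Fastly-ABTestFlags: A | Vary: Fastly-UserRole |
| Fastly-UserRole: free-user, Fastly-ABTestFlags: A | Vary: Fastly-UserRole, Fastly-ABTestFlags |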

I like to do this by writing a middleware in my Node.js app that exposes a method like req.isLoggedIn(). Calling that method reads the “Fastly-UserRole” header and adds it to the list of headers on which to vary in the response, ensuring that we don’t forget to vary when needed. Now we have better cache granularity: a single copy of the anonymous-user homepage, plus one copy per test bucket for each signed-in role.
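The same "record what you read" idea can be sketched in Python. This is not the Node.js middleware itself, just a model of the approach: `TrackedRequest`, `render_homepage` and the "paid-user" role name are all invented for the example.

```python
class TrackedRequest:
    """Wraps request headers and remembers which ones the handler consulted."""

    def __init__(self, headers):
        self._headers = {name.lower(): value for name, value in headers.items()}
        self._consulted = []

    def header(self, name):
        # Every read is recorded, so the Vary header can be built automatically.
        if name not in self._consulted:
            self._consulted.append(name)
        return self._headers.get(name.lower(), "")

    def is_logged_in(self):
        return self.header("Fastly-UserRole") in ("free-user", "paid-user")

    def test_bucket(self):
        return self.header("Fastly-ABTestFlags")

    def vary_header(self):
        return ", ".join(self._consulted)


def render_homepage(request):
    if not request.is_logged_in():
        body = "anonymous homepage"  # the test flags are never read on this path
    else:
        body = f"homepage, test {request.test_bucket()}"
    return body, {"Vary": request.vary_header()}


anon = TrackedRequest({"Fastly-UserRole": "anon-user", "Fastly-ABTestFlags": "A"})
member = TrackedRequest({"Fastly-UserRole": "free-user", "Fastly-ABTestFlags": "A"})
anon_body, anon_headers = render_homepage(anon)
member_body, member_headers = render_homepage(member)
```

Because the Vary value is derived from the reads rather than written by hand, a code path that starts consulting a new header begins varying on it without anyone having to remember to update the response.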

Almost anyone who knows anything about Vary will generally tell you not to do this, and it does depend on the ability of the cache to evaluate vary-keys in a deterministic way and to not implement any optimisations that assume all variations will use the same header set. I have not tested all caching proxies, so be warned (though I can confirm that this technique works perfectly well with Fastly).

Vary alternatives

Since Vary is a mechanism for distinguishing cache objects with the same URL, there are other ways we could do the same thing. The most obvious of these are to use distinct URLs behind Fastly, or to change the way we compute the cache key to include something other than the URL. The main difference between these techniques, and using Vary, is how each party in the pipe separates the variations.

| | Browser | Fastly | Origin |
|---|---|---|---|
| Vary | Sees Vary | Sees Vary | Sets Vary |
| Modify URL in VCL | Sees Vary | Sees separate URLs; Sets Vary | Sees separate URLs; No varying involved |
| Custom hash | Sees Vary | Sees same URLs as different cache objects; Sets Vary | Sees same URL; Might set Vary |

Modifying the URL

Changing the URL everywhere doesn’t work if we still have the basic Vary requirement of returning different content to two users loading the same URL, but we can treat the requests as distinct in Fastly and upstream of Fastly (in your origin server). For example, in vcl_recv, you can do something like:

# The right-hand side of ~ is a regular expression, so a literal "?" must be escaped
if (req.url ~ "\?") {
  set req.url = req.url "&lang=" req.http.Accept-Language;
} else {
  set req.url = req.url "?lang=" req.http.Accept-Language;
}

Doing this in vcl_recv means that each variation of Accept-Language in the request will result in a different URL being looked up in the cache and presented to your origin server. The only place where the variations occupy the same URL is now in the browser.

This technique works well, but has a few downsides:

- You still need to use Vary on the response from Fastly to the browser, and because your origin server is probably not adding it (since it doesn’t apply to the origin response) you will need to add it in vcl_deliver using Custom VCL.

- The URL manipulation will apply to every request, unless you write more complex VCL to limit it to only URLs that match a pattern, for example. This puts some of the separation logic in VCL and some in the backend, which is often a messy architecture.

- If you send a purge request to Fastly, you will purge only one of the URLs, since they have separate cache keys and Fastly doesn’t consider them variants. Normally, varied responses with the same cache key are all wiped with a single purge for that URL.
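For comparison, here is the same URL rewrite modelled in Python, where the standard library takes care of the "?" versus "&" decision. It is a sketch under a couple of assumptions of mine: `add_lang_param` is a hypothetical helper, the language passed in has already been normalized to a short code, and any lang parameter supplied by the client is dropped so that visitors cannot pick a variant by editing the URL.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_lang_param(url, language):
    """Return url with a lang parameter set to an already-normalized language."""
    parts = urlsplit(url)
    # Keep the existing parameters, minus any lang value the client sent.
    query = [(key, value)
             for key, value in parse_qsl(parts.query, keep_blank_values=True)
             if key != "lang"]
    query.append(("lang", language))
    return urlunsplit(parts._replace(query=urlencode(query)))


# Each language now has its own URL, and therefore its own cache key.
cache_keys = {"example.com" + add_lang_param("/path/to/page", lang)
              for lang in ("en", "ja", "es")}
```

The three separate cache keys are exactly what makes the purge downside above bite: purging one of them leaves the other two in cache.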

Modifying the cache key

In vcl_hash, you have the opportunity to modify the cache key algorithm. For example:

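sub vcl_hash {
  # The default cache key: URL plus host
  set req.hash += req.url;
  set req.hash += req.http.host;
  # The addition: each distinct Accept-Language value gets its own cache key
  set req.hash += req.http.Accept-Language;
  #FASTLY hash
  return(hash);
}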

This is similar to transforming the URL: since the URL is one of the components of the standard hash algorithm, transforming the URL is essentially modifying the hash too. The difference is that unlike the URL transform, modifying the hash algorithm directly means that the URL presented to the origin server remains unchanged.

I don’t like this, because it puts your origin server in a strange situation — it will use the Accept-Language header to generate responses for each language, and Fastly will cache them separately, but the origin need not advertise the variation with a Vary header, because if it does, Fastly will include the language in both the cache key and a vary-key too. There’s no significant performance downside to this but it feels to me like something that will come back and bite you later.
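To show what "both the cache key and a vary-key" means, here is a tiny Python model. It is illustrative only: `cache_key` and `storage_keys` are invented names, the "|" separator is arbitrary, and request header names are assumed to be lower-cased.

```python
def cache_key(host, url, request_headers):
    # Mirrors the custom vcl_hash above: URL, then host, then the language header.
    return "|".join([url, host, request_headers.get("accept-language", "")])


def storage_keys(host, url, request_headers, vary_header=""):
    """Return the (cache key, vary-key) pair an object would be stored under."""
    names = [n.strip() for n in vary_header.split(",") if n.strip()]
    vary_key = tuple(request_headers.get(n.lower(), "") for n in names)
    return cache_key(host, url, request_headers), vary_key


request = {"accept-language": "en"}
without_vary = storage_keys("example.com", "/path/to/page", request)
with_vary = storage_keys("example.com", "/path/to/page", request, "Accept-Language")
```

In the second case the language is recorded twice, once in each key. That is harmless, but it is redundant, and it is part of why this arrangement feels fragile to me.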

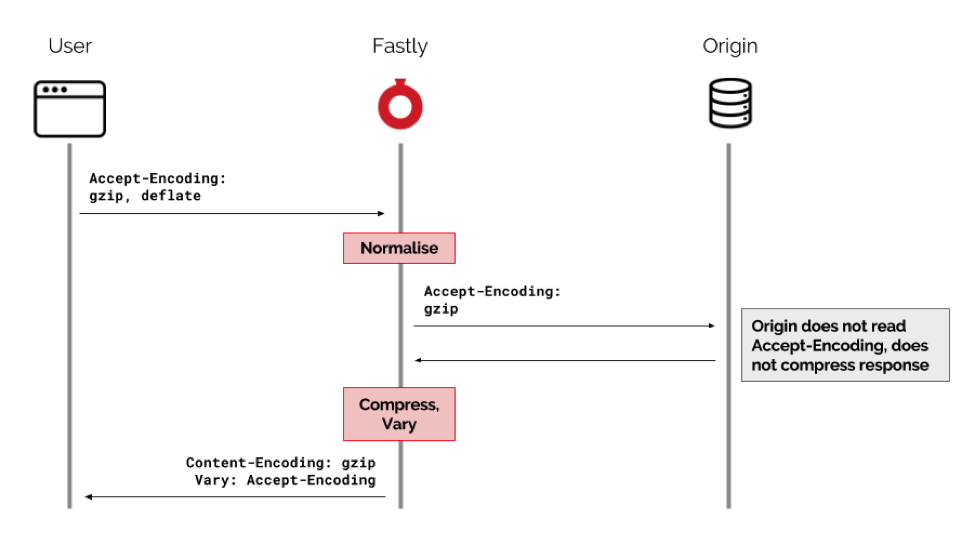

Normalizing Accept-Encoding and Edge GZip

Varying on Accept-Encoding is by far the most common use of Vary, so Fastly normalizes the Accept-Encoding value automatically. The value will be transformed into a single token which is currently either “gzip” or an empty string for no compression support (if you’re wondering about Brotli compression, see this community post).

We can also perform gzip compression for you, if you opt to enable it, allowing you to ignore Accept-Encoding at the origin and deal with it exclusively at the edge.

We’ve already covered the benefits of normalizing request metadata before using Vary on it, and this takes that principle one stage further by normalizing to a value that can be understood as a transformation that can be done at the edge itself, so your origin server need not create any variations.
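As a rough illustration of what collapsing Accept-Encoding to a single token involves, here is a Python sketch. I am not claiming this is Fastly's actual logic: `normalize_accept_encoding` is a hypothetical function, and treating "gzip;q=0" as "no gzip" is my reading of how q values should be handled.

```python
def normalize_accept_encoding(value):
    """Collapse an Accept-Encoding header value to "gzip" or ""."""
    for item in (value or "").split(","):
        coding, _, params = item.strip().partition(";")
        quality = 1.0
        for param in params.split(";"):
            name, _, q = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(q)
                except ValueError:
                    quality = 0.0  # an unparseable q value counts as a refusal
        if coding.strip().lower() == "gzip" and quality > 0:
            return "gzip"
    return ""  # no usable gzip support advertised


browser_values = ["gzip, deflate, br", "gzip,deflate", "GZIP", "deflate", None]
vary_keys = {normalize_accept_encoding(v) for v in browser_values}
```

However many spellings browsers send, there are only ever two possible vary-keys, which is why this particular Vary is cheap to honour.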

Conclusion

Good grief, you made it to the end. Here’s what we learned:

- Vary allows distinct variations of the same content to be cached against the same URL, and chosen based on the value of some request header.

- It’s most often used for Accept-Encoding (gzip / no-gzip), and should probably be used more for Accept-Language.

- Currently it’s not very useful to vary on other request headers from the browser, but transforming them at the CDN can produce additional useful values in private headers.

- You need to be very careful about which responses you include Vary on, and why, and for the most part always use the same Vary value for the same URL unless you really know what you’re doing.

Happy varying!