Deepfakes and disinformation: how new technical standards are creating a more trustworthy internet

The Coalition for Content Provenance and Authenticity (C2PA) develops technical standards to combat disinformation. The group has been working expeditiously behind closed doors for months, and it recently released a public draft specification designed to make it easier to trace the origin and evolution of the media we all create and consume.

The draft represents a big idea that has been a long time in the making: that technology can combat misinformation by surfacing how media has been manipulated, so that people can make smarter decisions about what they consume and share. Getting early feedback is critical to ensuring that this kind of technology is a positive force in the fight against disinformation.

At Fastly, we’re committed to empowering our customers to build a more trustworthy internet. Along with other media and internet organizations, we contributed to the creation of this public draft specification to further this mission.

Below, I’ll give a brief tour of the problem and the role of C2PA tech, and then explain how you can get involved. Conveniently, some tools are already popping up if you want to try this tech for yourself.

Some background on misinformation

Misinformation is inaccurate information, regardless of whether or not it is intended to deceive. It has a few subtypes, and the definitions are still evolving. Disinformation is the subset of misinformation that is deliberately deceptive. “Fake news” is considered misinformation that mimics news media in form, but not in intent.

Measuring and limiting the harm caused by any of these types of misinformation is challenging. Oversight, collaborative anti-abuse operations across platforms, and law enforcement, to name a few, all play important roles in addressing the issue.

One effective tool for limiting the harm caused by misinformation is media literacy: the set of practices that allow individuals to critically evaluate media, for example by identifying who authored a piece of media and what techniques were used to construct it.

How C2PA helps

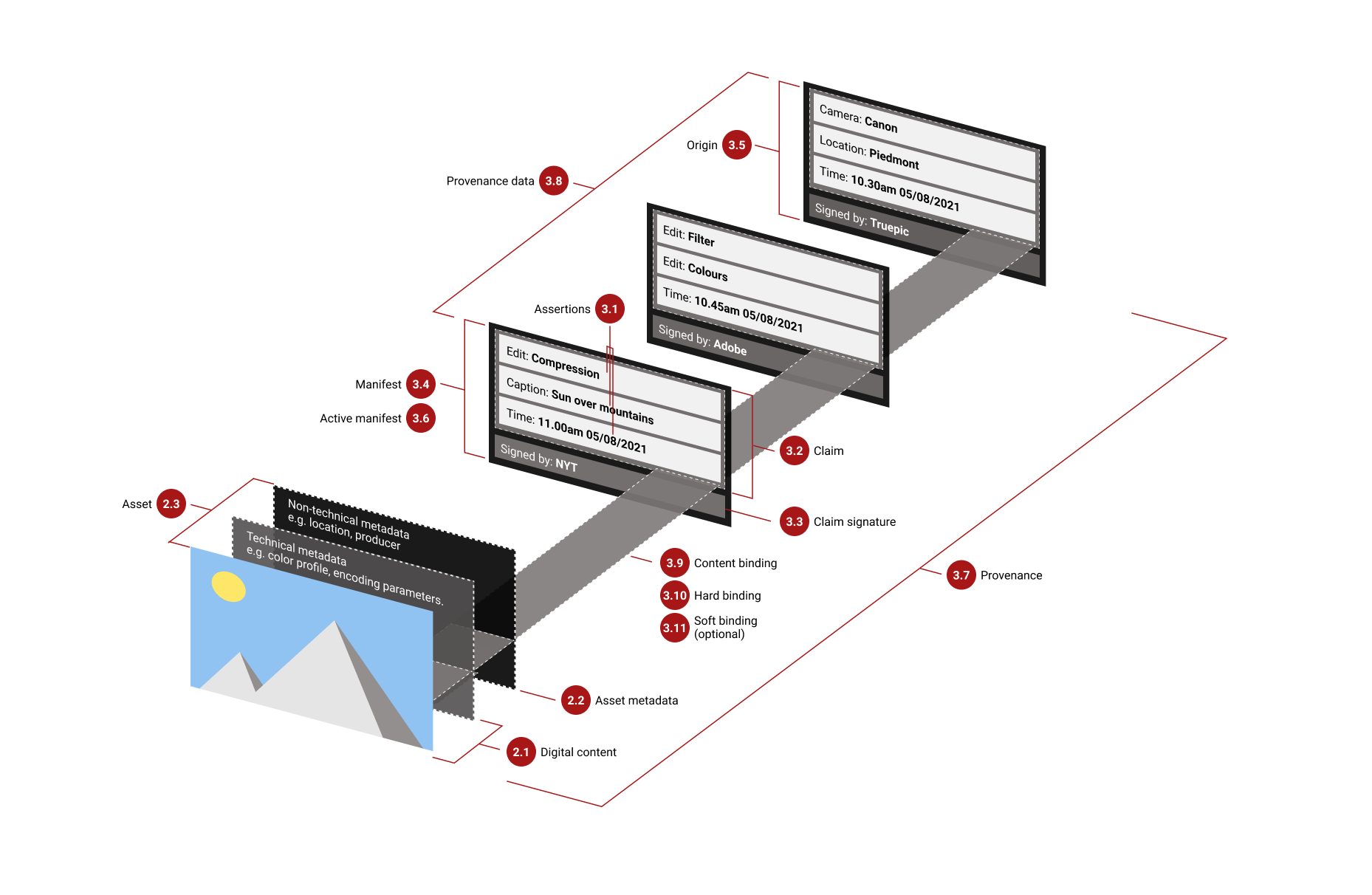

This is where technology like C2PA comes in. C2PA specs out an authenticated history of changes to a media asset. The idea is that media tools of all sorts — from your cell phone camera to a photo editor to your favorite edge compute platform — can then update and validate this history. When the asset is delivered to an end user, they can see (or hear or touch) the cryptographically bound history of the media they are consuming and use this information to reason about the media’s accuracy and legitimacy.

Elements of C2PA (from public draft v0.7)
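To make the idea of a “cryptographically bound history” more concrete, here is a minimal Python sketch of a hash-chained, signed edit log. It is not the C2PA manifest format: real C2PA relies on certificate-based digital signatures and its own data structures. This sketch assumes a single shared HMAC key (`DEMO_KEY`) as a stand-in for signing, and every name in it (`ProvenanceRecord`, `append_record`, `validate`) is hypothetical.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# Hypothetical stand-in for a signing key. Real C2PA uses certificate-based
# digital signatures; a shared-secret HMAC keeps this sketch stdlib-only.
DEMO_KEY = b"hypothetical-demo-key"


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceRecord:
    actor: str       # who made the change (hypothetical identity string)
    action: str      # what was done, e.g. "captured" or "cropped"
    asset_hash: str  # hash of the asset bytes after this action
    prev_hash: str   # hash of the previous record ("" for the first one)
    signature: str = ""

    def payload(self) -> bytes:
        # Canonical serialization of every field except the signature.
        return json.dumps(
            {"actor": self.actor, "action": self.action,
             "asset_hash": self.asset_hash, "prev_hash": self.prev_hash},
            sort_keys=True,
        ).encode()

    def record_hash(self) -> str:
        # Hash of the whole record, signature included, for chaining.
        return sha256_hex(self.payload() + self.signature.encode())


def sign(payload: bytes) -> str:
    return hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()


def append_record(history: list[ProvenanceRecord], actor: str,
                  action: str, asset: bytes) -> None:
    """Record an edit, binding it to the new asset bytes and the prior record."""
    prev = history[-1].record_hash() if history else ""
    rec = ProvenanceRecord(actor, action, sha256_hex(asset), prev)
    rec.signature = sign(rec.payload())
    history.append(rec)


def validate(history: list[ProvenanceRecord], asset: bytes) -> bool:
    """Check signatures, chain links, and that the history matches the asset."""
    prev = ""
    for rec in history:
        if not hmac.compare_digest(rec.signature, sign(rec.payload())):
            return False  # record altered, or not signed with the key
        if rec.prev_hash != prev:
            return False  # records removed, reordered, or inserted
        prev = rec.record_hash()
    # The latest record must describe the bytes actually delivered.
    return bool(history) and history[-1].asset_hash == sha256_hex(asset)


if __name__ == "__main__":
    history: list[ProvenanceRecord] = []
    photo = b"raw sensor bytes"
    append_record(history, "camera-app", "captured", photo)
    edited = photo + b" (cropped)"
    append_record(history, "photo-editor", "cropped", edited)

    for rec in history:
        print(rec.actor, rec.action)
    print(validate(history, edited))         # True
    print(validate(history, edited + b"!"))  # False: changed with no new record
```

Because each record commits to both the asset’s hash after the change and the previous record, removing, reordering, or altering a record breaks validation in this sketch. So does changing the asset bytes without appending a record, which is roughly what happens when a tool that doesn’t know about the history edits or re-encodes the file.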

C2PA-enabled tools capture and present information that is known to aid media literacy decisions. Ultimately, C2PA aims to put this information into users’ hands soundly and conveniently, at just the right time: for instance, when you are making “rapid media literacy decisions” (aka deftly rolling through your Twitter feed at high speed). Imagine being able to see a full history of how a video was modified (and who did it) before deciding to retweet it.

An important RFC

As you might have guessed (or have already known), this is a tricky problem to work on.

There are technical issues. Some are tractable, like helping provenance metadata interoperate with C2PA-unaware editing and delivery toolchains. Others are far-reaching, like supporting the myriad identity providers that C2PA adopters could use.

But ultimately, C2PA is proposing a federated system that deals with real people and real harms. That raises the stakes for typical system design concerns like functionality and information security, and it also demands special consideration of issues like privacy and harm minimization. For example, a real-name policy could be useful in reasoning about media, but it could also expose individuals to risks, including the risk of physical harm. How should C2PA tech be designed to minimize negative impacts like these?

Getting diverse input into the design of systems like C2PA is critical to maximizing the benefit — and minimizing the harms — that they can bring to individuals who are affected by the proliferation of misinformation. In other words, pretty much everyone. Your input, both technical and non-technical, can help shape how this sort of technology works.

It’s up to us

Let’s face it: Misinformation might get worse before it gets better. Reports of the inadvertent spread of misinformation, advances in dual-use technology like deepfakes, and pointed disinformation campaigns continue to roll in. It can feel hopeless. But it isn’t. We, as technologists and critical thinkers, might be able to help. Content provenance technology isn’t a panacea, and it faces challenges, but it also has potential.

If you’re interested in helping address this problem, check out the C2PA specification and give us your thoughts.