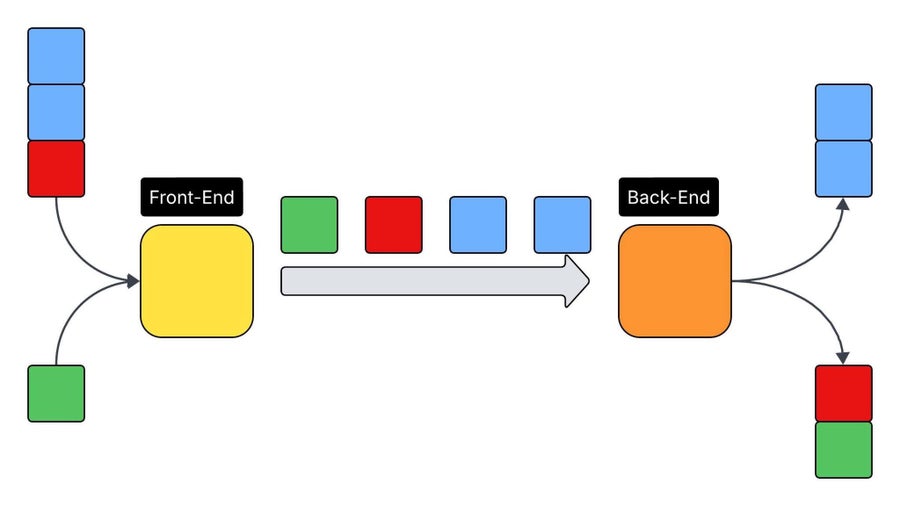

When web applications were simpler, there was no need to front back-end servers with reverse proxies. However, as user needs grew and system designers required performance and resilience, system design evolved such that modern web application system configurations now use chains of servers between clients and the application logic. Because this system design is often used, there is a growing threat of attacks designed to desynchronize the servers in the chain.

HTTP/1.1 can introduce confusion for services processing requests since it allows two different ways to specify the length of content within an HTTP Request message. This ambiguity is amplified when multiple servers are chained together in order to handle user requests. In addition, HTTP/1.1 allows for multiple HTTP Requests to be sent over the same network connection from one server to the next, making it extremely important that each of the servers in the chain agree on where one request ends and another begins. If there is a mismatch between how the front-end and back-end servers determine the beginning and ending of HTTP Requests, it is possible to smuggle requests to the back-end that were not intended to be processed.

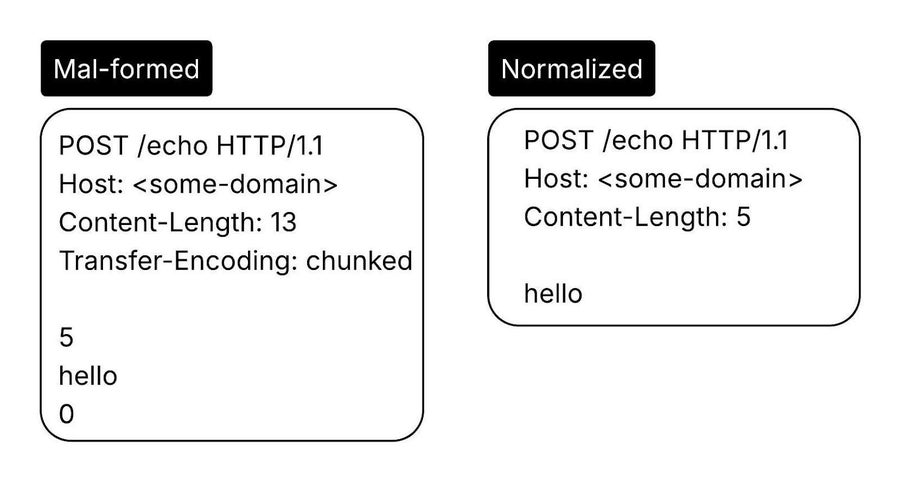

With that in mind, Fastly has been architected and built to be resistant to such attacks. The Fastly stack uses strict, standards-adherent protocol parsing, which removes the ambiguity that attackers rely on to exploit request smuggling vulnerabilities. Additionally, Fastly either rejects or normalizes ambiguous requests at the edge. For example, a request containing both a Content-Length and a Transfer-Encoding header will be normalized to prevent it from reaching customer origins in an exploitable state. Finally, Fastly denies GET and HEAD requests that contain a body. When this occurs, Fastly sends a 400 Bad Request response and drops the request, preventing it from reaching the origin. GET and HEAD request bodies are transmitted to the origin only on PASS requests, and only if the service is specifically configured to permit it.

In this blog post, we describe how this inconsistent parsing arises and its implications for application security. Next, we detail Fastly’s resilience to these attacks and what we do differently. Finally, we highlight Fastly’s posture against HTTP/1.1 desynchronization attacks and the key architectural principles that Fastly leverages to defend against such malicious actions.

HTTP Desynchronization Attacks

In many modern web applications, HTTP Request messages often flow through a chain of intermediaries before reaching the back-end server. This multi-layered approach offers performance and security benefits, but may also introduce critical vulnerabilities. One such vulnerability is an HTTP/1.1 desynchronization attack, also known as request smuggling. This class of attack exploits potential discrepancies in how intermediaries, such as front-end servers, and back-end servers interpret the boundaries between HTTP Requests. Inconsistent handling of these boundaries may allow an attacker to inject malicious requests and compromise the entire system.

The foundation of HTTP/1.1 desynchronization attacks lies in the protocol's ambiguity regarding the length of the request. The HTTP/1.1 specification allows for two headers to define the body of a request: Content-Length and Transfer-Encoding.

Content-Length: This header specifies the exact number of bytes in the request body.

Transfer-Encoding: chunked: This header indicates that the body is broken into a series of chunks, each with a specified size. The body is terminated by a chunk of size zero.

RFC 2616, a long-standing HTTP/1.1 specification that has since been superseded (most recently by RFC 9112), states that if both headers are present, Transfer-Encoding must take precedence and the Content-Length header must be ignored. However, different server implementations (front-end and back-end) may interpret the HTTP/1.1 specification slightly differently. These minor parsing discrepancies can become a major security flaw if the interpretations are not consistent between the front-end and back-end servers.
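To make the two framing mechanisms and the precedence rule concrete, here is a minimal sketch of a parser that follows the rule described above. The function names are hypothetical, headers are assumed to be already parsed into a dict with lowercase names, and trailers are assumed to be empty; it is an illustration, not a complete HTTP parser.

```python
def body_by_content_length(headers: dict[str, str], rest: bytes) -> tuple[bytes, bytes]:
    """Split `rest` into (body, leftover) using the Content-Length value."""
    n = int(headers["content-length"])
    return rest[:n], rest[n:]


def body_by_chunked(rest: bytes) -> tuple[bytes, bytes]:
    """Split `rest` into (decoded body, leftover) using chunked framing."""
    body = b""
    while True:
        size_line, _, rest = rest.partition(b"\r\n")
        size = int(size_line.split(b";")[0], 16)  # ignore chunk extensions
        if size == 0:
            # Assumption: no trailer fields, so only the final CRLF remains.
            return body, rest.partition(b"\r\n")[2]
        body += rest[:size]
        rest = rest[size + 2:]  # skip the chunk data and its trailing CRLF


def frame_body(headers: dict[str, str], rest: bytes) -> tuple[bytes, bytes]:
    """Apply the precedence rule: chunked wins and Content-Length is ignored."""
    if headers.get("transfer-encoding", "").lower() == "chunked":
        return body_by_chunked(rest)
    if "content-length" in headers:
        return body_by_content_length(headers, rest)
    return b"", rest  # no framing header: the request has no body


wire = b"5\r\nhello\r\n0\r\n\r\n"
# Both headers present: the chunked framing is used and "3" is ignored.
body, leftover = frame_body({"transfer-encoding": "chunked", "content-length": "3"}, wire)
```

A server that skipped the first branch and trusted the Content-Length of 3 would read only `5\r\n` as the body and treat the rest as the next request, which is exactly the kind of disagreement the following sections describe.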

The core of the problem is that when a front-end and a back-end server interpret the request length differently, their communication becomes desynchronized. An attacker can send a single, carefully crafted HTTP Request that the front-end sees as one complete request, but the back-end sees as two requests. This leaves a potentially malicious request waiting on the back-end's connection, which will then be prepended to the next legitimate request, leading to a compromise. A compromise could allow a request to bypass security checks, poison a server’s cache, or provide access to internal resources.

HTTP/1.1 desynchronization attacks are built on the following key concepts:

Request Pipelining and Keep-Alive Connections: HTTP/1.1 allows for multiple requests to be sent over a single TCP connection. This performance-enhancing feature, while beneficial, is also a prerequisite for desynchronization attacks. It allows the smuggled request to remain on the connection and poison the next request.

Front-End and Back-End Discrepancies: The vulnerability exists because the front-end server and the back-end server have different ways of parsing requests. The front-end might honor the Content-Length header, while the back-end might prioritize Transfer-Encoding.

Smuggling a Request: The attacker crafts a request with both Content-Length and Transfer-Encoding headers. For example, the Content-Length value covers the entire body, while the chunked body ends early with a zero-size chunk that is followed by the start of a second, malicious request. The front-end frames the request based on the Content-Length and forwards all of it to the back-end. The back-end, which honors Transfer-Encoding, processes the first, legitimate part of the request up to the terminating chunk, and then treats the remaining bytes as the beginning of a new request. This smuggled request is now waiting on the connection for the next user. A generalized view of such parsing inconsistencies is shown below.

Inconsistent Header Handling Facilitates Request Smuggling
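The flow above can be reproduced with two toy parsers that read the same bytes. This is a sketch under stated assumptions: `leftover_by_content_length` stands in for a front-end that only honors Content-Length, `leftover_by_chunked` for a back-end that only honors Transfer-Encoding, and the `/admin` path and `X-Ignore` header are invented for illustration.

```python
def split_head(raw: bytes) -> tuple[dict[str, str], bytes]:
    """Split a raw request into lowercase headers and the bytes after them."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    headers = {}
    for line in head.split(b"\r\n")[1:]:  # skip the request line
        name, _, value = line.decode("ascii").partition(":")
        headers[name.strip().lower()] = value.strip()
    return headers, rest


def leftover_by_content_length(raw: bytes) -> bytes:
    """Toy front-end: frames the body by Content-Length only."""
    headers, rest = split_head(raw)
    return rest[int(headers["content-length"]):]


def leftover_by_chunked(raw: bytes) -> bytes:
    """Toy back-end: frames the body by chunked encoding only."""
    _, rest = split_head(raw)
    while True:
        size_line, _, rest = rest.partition(b"\r\n")
        size = int(size_line, 16)
        if size == 0:
            return rest.partition(b"\r\n")[2]  # skip the final CRLF
        rest = rest[size + 2:]


smuggled = b"GET /admin HTTP/1.1\r\nX-Ignore: x"
body = b"0\r\n\r\n" + smuggled  # zero-size chunk, then the smuggled prefix
attack = (
    b"POST / HTTP/1.1\r\n"
    b"Host: example.com\r\n"
    b"Content-Length: " + str(len(body)).encode() + b"\r\n"
    b"Transfer-Encoding: chunked\r\n"
    b"\r\n" + body
)

front_leftover = leftover_by_content_length(attack)  # front-end: one request
back_leftover = leftover_by_chunked(attack)          # back-end: bytes remain

# The next user's request arrives on the same back-end connection.
victim = b"GET /home HTTP/1.1\r\nHost: example.com\r\n\r\n"
what_back_end_parses = back_leftover + victim
```

The front-end sees nothing left over, while the back-end is left holding the smuggled prefix. Because that prefix ends in an unfinished `X-Ignore` header, the victim's request line is absorbed into the header value and the back-end processes a request for `/admin` instead.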

Desynchronization attacks can be categorized based on how the front-end and back-end servers handle the conflicting headers. Fastly has published a detailed learning page on HTTP request smuggling, which includes tips and best practices for prevention. In brief, the most common classes are:

CL.TE: The front-end server uses the Content-Length header to determine the request boundary, while the back-end server honors the Transfer-Encoding header. This is a classic and highly effective attack vector.

TE.CL: This is the reverse of CL.TE. The front-end uses Transfer-Encoding, but the back-end uses Content-Length. Attackers can use obfuscated Transfer-Encoding headers that the front-end ignores but the back-end honors, or vice-versa, to create the desynchronization.

TE.TE: In this scenario, both servers honor the Transfer-Encoding header, but an attacker uses obfuscation techniques (e.g., non-standard whitespace, duplicate headers) to cause one of them to ignore it. The servers then disagree on the request boundary.

0.CL: In this scenario, the front-end is tricked into not processing the Content-Length header. A front-end confused in this way will not properly discard or drain the request body when it finishes processing the request. The request body will then remain in the pipeline, and the front-end will incorrectly process the body as if it were the next request. This desynchronization can then potentially be leveraged to perform further attacks.
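The obfuscation that drives TE.TE can be illustrated with two toy header matchers that disagree about whether a line means chunked encoding. Both matchers and the list of variants are hypothetical and chosen for illustration; real servers differ in other, subtler ways.

```python
def sees_chunked_strict(line: str) -> bool:
    """Toy server A: requires the exact field name and the exact value."""
    name, sep, value = line.partition(":")
    return (
        sep == ":"
        and name.lower() == "transfer-encoding"
        and value.strip(" \t").lower() == "chunked"
    )


def sees_chunked_lenient(line: str) -> bool:
    """Toy server B: trims the field name and accepts any value mentioning chunked."""
    name, _, value = line.partition(":")
    return name.strip().lower() == "transfer-encoding" and "chunked" in value.lower()


variants = [
    "Transfer-Encoding: chunked",     # canonical form
    "Transfer-Encoding : chunked",    # whitespace before the colon
    "Transfer-Encoding: xchunked",    # unknown coding name
    "Transfer-Encoding:\x0bchunked",  # vertical tab before the value
]

# Lines on which the two servers would choose different framing.
disagreements = [
    v for v in variants if sees_chunked_strict(v) != sees_chunked_lenient(v)
]
```

Every variant except the canonical one ends up in `disagreements`. For each of those lines, one server frames the body as chunked while the other falls back to Content-Length, which recreates the CL.TE or TE.CL condition even though both servers nominally support Transfer-Encoding.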

HTTP/1.1 desynchronization attacks represent a fundamental flaw in the HTTP/1.1 protocol. As long as different implementations of the protocol exist and are chained together in web application architectures, these vulnerabilities will persist. Organizations must enforce strict validation of HTTP headers, normalize requests before forwarding them, and ensure consistent header interpretation across all components of their web infrastructure to prevent these insidious and impactful attacks.

Fastly’s Resilience to Attacks

As curl developer Daniel Stenberg put it: HTTP is not simple. HTTP/1.1, being a text-based protocol, presents many challenges in consistent parsing and handling across different systems and services. When your service or website is using Fastly, we ensure that the user requests being processed at the edge are parsed, normalized, and handled in a consistent manner, removing any ambiguity that request smuggling attack vectors rely on. Doing this at the edge also ensures that back-ends receiving requests from Fastly won’t need to worry about any ambiguities either, as they are receiving the normalized request.

The Fastly edge proxy is powered by h2o, which handles all incoming edge HTTP Requests. The h2o server utilizes picohttpparser, a battle-tested, robust, and highly performant HTTP parsing library. This parsing library is focused on simplicity and standards adherence, allowing Fastly to be resilient against attacks on HTTP parsing. Attackers often attempt to bypass filters by using subtle obfuscations, such as extra whitespace or newlines. Because picohttpparser encodes a strict, no-compromise interpretation of the HTTP standard, it rejects non-compliant syntax that less-strict parsers might accept, closing the door on a whole class of parsing differentials.

At the edge, h2o is responsible for handling and terminating all incoming connections to Fastly, including HTTP/1.1, HTTP/2 and HTTP/3. It checks for any ambiguities in the request (such as CL.TE combinations, or multi-line headers) and denies, with a 400 Bad Request, any request that does not conform to the strict format rules. This includes blocking HTTP GET and HEAD requests with bodies by default, a common request smuggling technique. Upon successful parsing, h2o will then normalize the request in order to relay it to a back-end handler for Delivery or Compute services. Doing this normalization first at the edge allows our own systems to handle the client requests in a consistent and secure manner all the way through to the origin.
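The rejection rules named above can be sketched as a small policy function. This is an illustrative approximation and not h2o's or picohttpparser's actual code: the function name `check_request`, the input shape (a method plus raw header lines), and the exact checks are assumptions made for the example.

```python
from typing import Optional


def check_request(method: str, header_lines: list[str]) -> Optional[int]:
    """Return 400 for a request the policy rejects, or None to accept it."""
    fields: dict[str, str] = {}
    for line in header_lines:
        if line[:1] in (" ", "\t"):
            return 400  # continuation of a multi-line (folded) header
        name, sep, value = line.partition(":")
        if sep != ":" or not name or name != name.strip():
            return 400  # missing colon or whitespace around the field name
        fields[name.lower()] = value.strip()

    has_te = "transfer-encoding" in fields
    has_cl = "content-length" in fields
    if has_te and has_cl:
        return 400  # conflicting framing headers (the CL.TE / TE.CL setup)
    if has_cl:
        length = fields["content-length"]
        if not (length.isascii() and length.isdigit()):
            return 400  # Content-Length must be a plain decimal number
    has_body = has_te or (has_cl and int(fields["content-length"]) > 0)
    if method in ("GET", "HEAD") and has_body:
        return 400  # GET/HEAD with a body, denied by default
    return None


ok = check_request("POST", ["Host: example.com", "Content-Length: 5"])
rejected = check_request("POST", ["Content-Length: 5", "Transfer-Encoding: chunked"])
```

Here `ok` is `None` and `rejected` is `400`. The point of the design is that every ambiguous input has a single outcome decided at the edge, so no downstream component is left to pick its own interpretation.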

Normalization of HTTP headers

Fastly takes extra precautions during connection reuse to origin servers as well, by ensuring the connection is fully drained (no unread data left on the socket) before sending the next request to the origin. This ensures that a smuggled request fragment, left behind by a desynchronization attack on the origin, is completely discarded instead of being mistakenly prepended to the next request or to the response. Fastly also ensures that any downgrades from the edge to the origin are handled in a standards-compliant and consistent manner. When creating a request to the origin server, Fastly will create a new request and apply a single, authoritative Content-Length header.
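As a rough illustration of the last point, the sketch below builds a fresh origin request from already-parsed parts and gives it exactly one Content-Length header. The function name `build_origin_request` and its inputs are hypothetical, and the sketch assumes the body has already been fully read and decoded; it does not represent Fastly's implementation.

```python
FRAMING_HEADERS = {"content-length", "transfer-encoding"}


def build_origin_request(
    method: str, target: str, headers: list[tuple[str, str]], body: bytes
) -> bytes:
    """Serialize a new request whose only framing header is Content-Length."""
    # Drop every client-supplied framing header, however many there were.
    kept = [(n, v) for n, v in headers if n.lower() not in FRAMING_HEADERS]
    # Add one authoritative length derived from the body actually read.
    kept.append(("Content-Length", str(len(body))))
    head = f"{method} {target} HTTP/1.1\r\n" + "".join(
        f"{n}: {v}\r\n" for n, v in kept
    )
    return head.encode("ascii") + b"\r\n" + body


origin_request = build_origin_request(
    "POST",
    "/",
    [("Host", "example.com"), ("Content-Length", "3"), ("Transfer-Encoding", "chunked")],
    b"hello",
)
```

Because the length is computed from the bytes that will be sent rather than copied from the client, the origin has only one way to find the end of the request, regardless of what the client originally claimed.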

By ensuring users go through Fastly to reach your application, you’re inherently increasing the security of your application by taking advantage of our uniform protection at massive scale.

Fastly’s Security-First Approach to HTTP Desynchronization Threats

HTTP desynchronization attacks pose a significant threat to modern web applications by exploiting ambiguities in the HTTP/1.1 protocol. Fastly’s focus on security is a key aspect of offering reliable and safe services. Threats, such as desynchronization attacks, are a risk for content providers and content delivery networks. By leveraging strict parsing, normalization, and disciplined resource management, we are able to minimize the threat of desynchronization attacks on our customers and on our infrastructure.

We also recognize that the threat landscape is always evolving and everyone must remain vigilant for new attacks and respond accordingly. Fastly is constantly analyzing attacks in order to stay ahead of the threats to us and our customers. By using Fastly, organizations can leverage our uniform protection at a massive scale, inherently increasing the security of their applications. Our architectural principles and robust defensive mechanisms ensure that web applications are shielded from the insidious nature of HTTP desynchronization attacks. If you’re interested in learning more about how Fastly protects your applications from evolving threats, get in touch with one of our experts today.