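"AI solutions offer an opportunity to significantly reduce the manual burden across application security, vulnerability management, and security operations. While there has been some progress in automating workflows, today's security teams are still overwhelmed by the sheer volume of work they must process. The research shows that security leaders are using this new technology to get ahead of problems, finding and fixing security risks quickly. The challenge ahead is to lower the false-positive rate of AI-driven security signals and to integrate these solutions effectively into workflows so they don't become AI shelfware." - Marshall Irwin, CISO, Fastly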

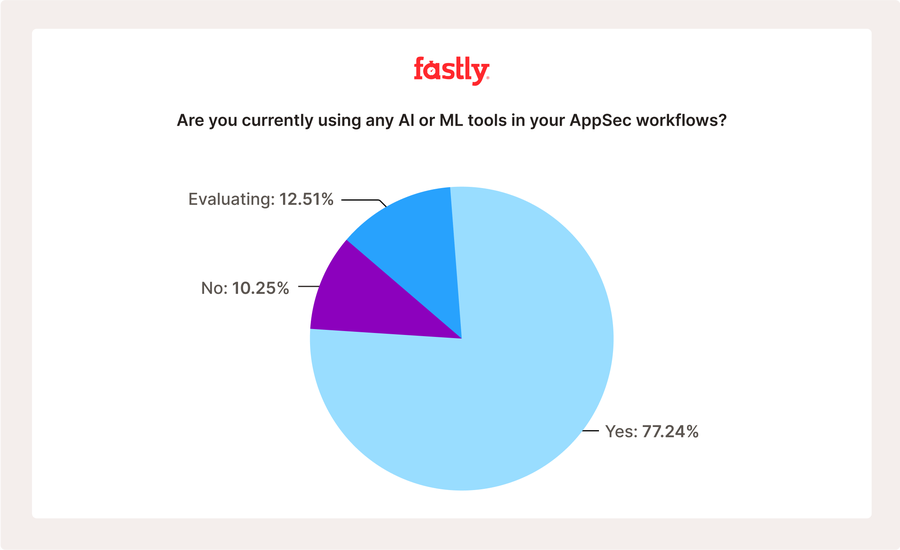

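The rapid integration of artificial intelligence (AI) into application security (AppSec) has been hailed as a breakthrough that can relieve a massive manual burden and accelerate vulnerability detection. Given expanding attack surfaces, limited resources, and pressure to ship more code faster (and securely), we hypothesized that AI could help close that gap. Indeed, our latest survey reveals a striking trend: 90% of respondents are already using AI in their AppSec programs or actively exploring it. Across regions and industries, 77% of respondents already use AI, and another 13% are evaluating it, at least to some degree, within their AppSec programs and workflows.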

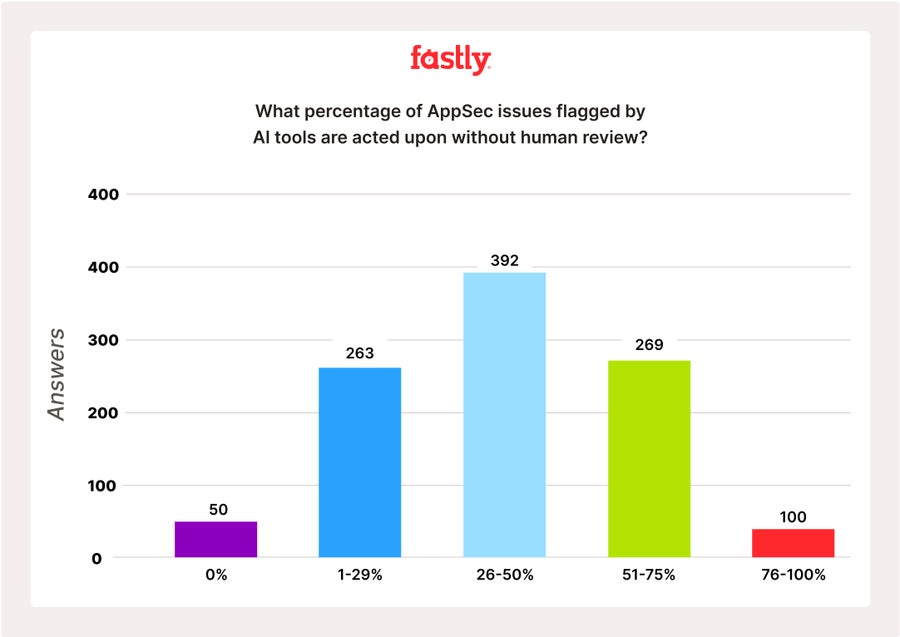

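Behind this enthusiastic adoption, however, lies a significant and arguably concerning contradiction. Despite their heavy reliance on AI, respondents report little to no oversight of AI outputs. Roughly one-third of respondents report that more than 50% of the AppSec issues identified by AI tools in their workflows are addressed without human review. Is this a sign of trust, or a sign that teams are taking calculated risks to keep up their pace?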

AI Adoption Trends: A Global and Industry Breakdown

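Overall, 77% of survey respondents say they already use AI within their existing AppSec workflows, with the high-tech industry in the lead (88% use AI for AppSec use cases). SaaS (86%) and healthcare (82%) follow, while media and entertainment (M&E, 73%) and the public sector (64%) lag behind in AI adoption. By region, reliance on AI is highest in South America at 90%, followed by Asia (82%) and Europe (80%). North America trails at 75%. This appears to point to differences in available resources and talent. Could North America's lower reliance on AI reflect a more saturated talent pool in the security and technology sectors?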

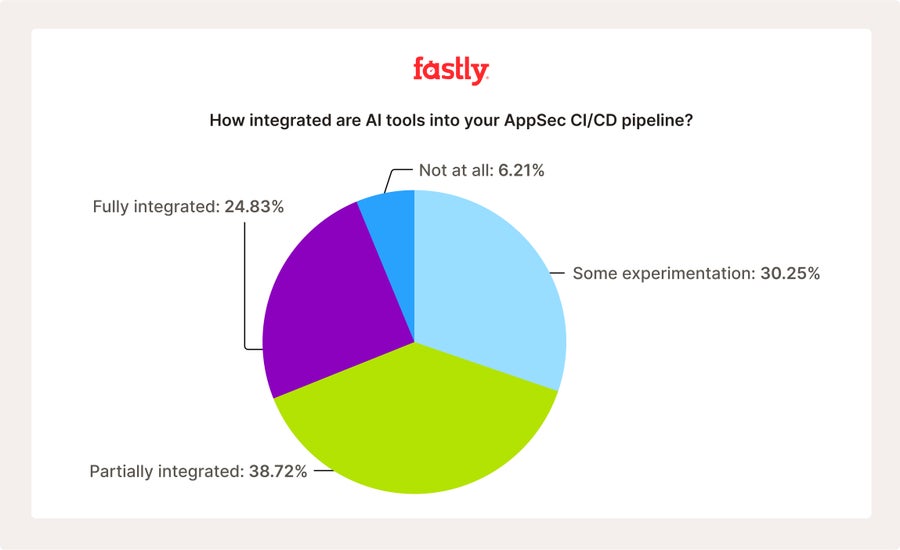

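When we asked about integration into existing CI/CD pipelines and the extent of AI-driven security tool deployment, 25% of respondents said AI is fully integrated into their existing development pipelines. Another 39% said it is partially integrated, 31% said they are "experimenting" with implementation, and just 6% said AI is currently "not integrated at all" into their existing workflows. The same regional trend from the previous question appeared here: 38% of South American respondents said AI is fully integrated into their existing pipelines, compared with just 25% in North America. By industry, high tech reported the highest rate of full integration (40%), while 19% of M&E respondents and 15% of gaming respondents reported full integration.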

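For respondents, the benefits of AI are clear. 55% report an (unsurprising) reduction in manual work, 50% report "faster vulnerability detection," 43% report better triage capabilities, and 36% report a shorter time to remediate vulnerabilities. But are these benefits coming at the expense of accuracy and real security?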

Reliability and Accuracy: Assessing Trust in AI for AppSec

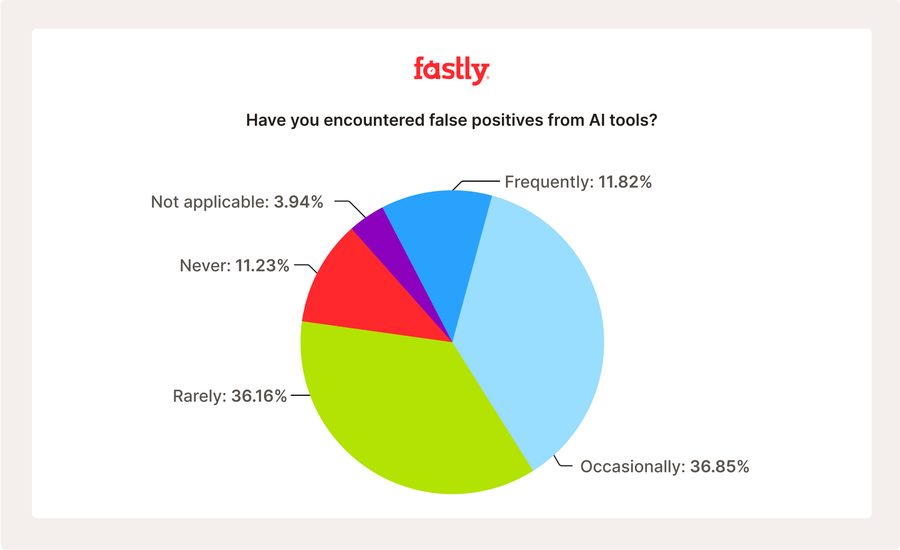

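Given this widespread adoption and integration, we wanted to better understand how respondents feel about AI's reliability and trustworthiness. We therefore asked how often they encounter false positives from AI-driven security tools. 37% reported occasional false positives and 12% reported frequent ones, meaning nearly half of respondents (49%) experience false positives at least occasionally. This can have serious negative consequences for any security program. Only 11% said they had "never" seen a false positive. That raises the question of whether AI-driven security tools are truly producing "good enough" security outcomes.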

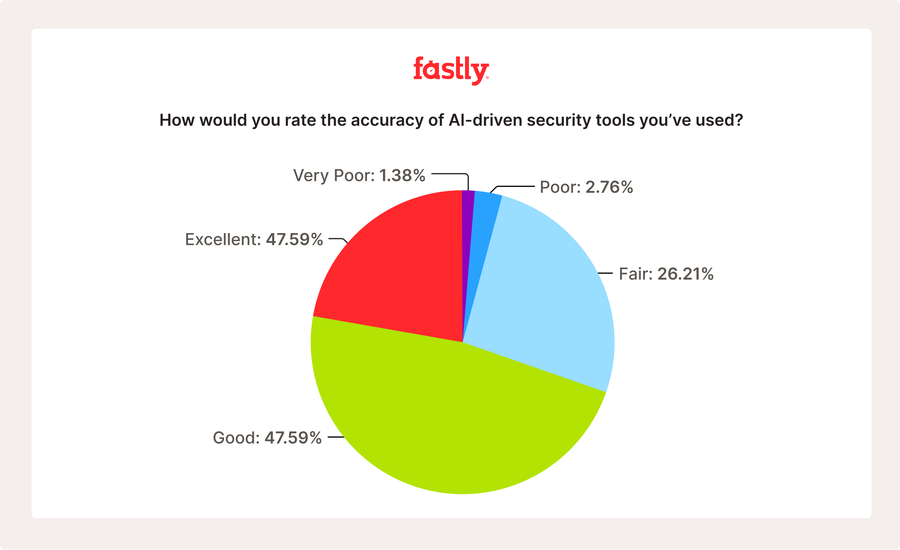

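We then asked about overall confidence in AI's accuracy. Only 22% rated it "very good," 48% rated it "fairly good," and a combined 30% rated it "fair" or "very poor." We also wanted to explore the challenges security teams face when using AI in their security workflows. Respondents could select multiple options. They reported that the following factors are holding their teams back: integration complexity (46%), a lack of in-house skills (38%), a lack of trust in the results (36%), regulatory or compliance concerns (33%), and insufficient explanation of security findings (23%).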

In open-ended responses, respondents said there is "too much to debug later" and that they have "ethical and compliance concerns about using AI in security workflows."

A Critical Gap: AppSec Issues Addressed Without Human Review

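Comparing the responses on AI adoption and integration with those on oversight, trust, and outcomes reveals a clear pattern. AI is already embedded in day-to-day work, helping teams move faster and offsetting shortfalls in resources and skills, but it is far from perfect. Given the false positives and the mixed ratings of trust and overall accuracy, we explored what mechanisms and guardrails organizations have in place to validate security results.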

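We asked respondents what percentage of the issues found by AI-driven security tools are addressed without human review. 4% of respondents said that 76-100% of identified issues are addressed without human intervention. 26% said that 51-75% of identified issues are addressed without human intervention. Another 26% said that 1-25% of identified issues are addressed without human intervention.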

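In other words, roughly one-third of respondents (4% + 26% = 30%) report that more than 50% of the application security issues identified by AI-driven tools in their workflows are addressed without any human review. Given the mixed views on AI's overall accuracy and performance described above, we suspect this lack of oversight stems from limited resources and bandwidth. It is combined with a risk tolerance high enough to accept AI as "good enough."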

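We asked organizations that practice some degree of AI oversight which governance controls they use to validate results. In this multiple-response question, 66% said they use verification checkpoints, 49% vet AI models, 46% rely on auditing and logging, and 32% use secure sandboxing. It is encouraging that some oversight is happening, but these figures need to be read alongside the responses above. Oversight practices do exist, yet the share of respondents who apply them is worryingly limited.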

The Future of AI in AppSec: The Promise of More (and Better) AI

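Looking ahead, we asked respondents what they want, or need, from AI in AppSec use cases. When asked which improvements or capabilities they hope to see from AI in AppSec, 32% said "don't know" or "it's fine for now." 18% want "more accuracy and fewer false positives," and 10% said they need better AppSec-specific capabilities in general. A combined 9% want faster results through automated, real-time vulnerability detection while coding.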

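Among the open-ended responses, one notable comment asked for AI to "be more transparent and notify us immediately, and with greater accuracy, when it detects a threat." Others said they want "AI tools to better understand complex business context so they can prioritize vulnerabilities accurately." Another noted that "AI needs to be able to accurately distinguish legitimate activity from malicious behavior and explain the reasoning behind its decisions."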

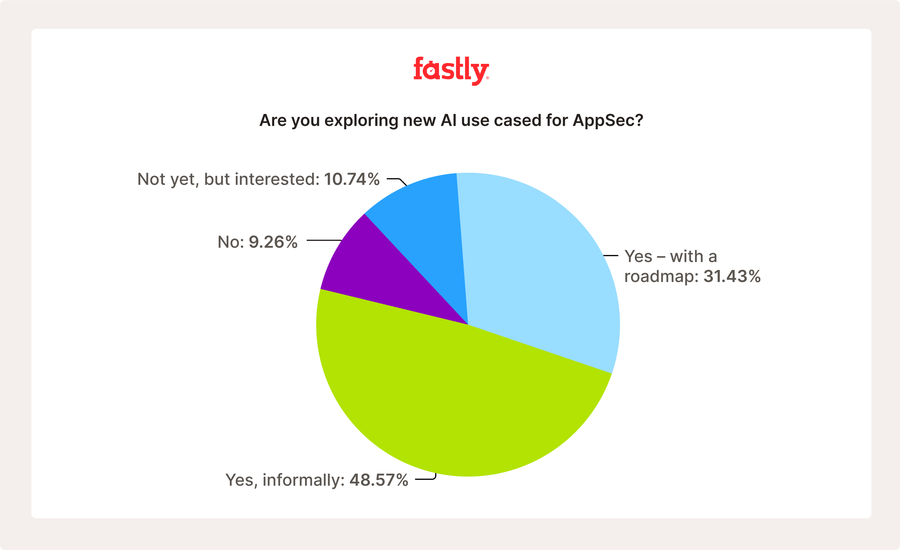

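We asked respondents whether they are considering new or future AI use cases within their AppSec programs. 49% said they are, albeit informally, and 31% said they are doing so with a formal roadmap. A combined 20% said they are not yet considering new use cases or hope to do so soon. In total, then, 80% are considering new or additional AI use cases. Respondents said they "want to experiment with AI-driven threat modeling and automated code review to make security more accurate and efficient" and "want to implement automated remediation, predictive modeling, and AI code review."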

Methodology

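Fastly conducted this survey of 1,015 professionals from August 20 to September 3, 2025. All respondents confirmed that their role includes influencing or making application security purchasing and strategy decisions. The survey was conducted in North America, South America, Europe, the Middle East, and Asia. Results were quality-controlled for accuracy, but as with all self-reported data, some degree of bias is possible.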