When building real-time services with Fastly Compute, Fanout is the key to unlocking experiences like Server-Sent Events, HTTP streaming, and WebSockets-over-HTTP, without breaking the request/response model that makes Compute so powerful.

Until now, building real-time features for Fastly Compute posed some challenges during development, as there wasn’t a straightforward way to test your Fanout integration locally.

But the days of deploying to a real service just to find out if things worked are over: say hello to local Fanout testing!

Fanout is a powerful component of the Fastly platform that allows your application to offload long-lived connections and real-time delivery to a specialized message-broker service that speaks HTTP, making it ideal for Fastly’s event-driven architecture.

Fanout enables use cases like:

Live chat

Multiplayer game synchronization

Real-time dashboards and stock tickers

Collaborative editing tools

WebSocket-style interactivity—without the complexity of WebSockets

To use it, you write an application for Fastly Compute, and in your application’s handler you perform a “Fanout handoff” and specify a backend. Fanout does the hard part of hanging on to the long-lived connection at the edge, while your backend uses HTTP to control the connection and send messages over it.

But until now, it wasn’t possible to test these features locally. If you wanted to test Fanout functionality in a Compute program, you had to deploy to a real Fastly service. This meant signing up for Fastly if you hadn’t already, as well as enabling Fanout on your service. That was the only way to execute a Fanout handoff and see it work end-to-end.

As someone building with Fanout internally at Fastly, I’ve felt this friction point firsthand. When working on a proof-of-concept or early-stage prototype, you don’t always want to spin up production infrastructure or worry about usage metering. You just want to iterate locally and validate behavior.

And now, you can.

Introducing: Local Fanout testing

With the latest updates to the local testing server (known as Viceroy) and the Fastly CLI, you can now test real-time Compute services locally, including the Fanout handoff.

This unlocks the full local development loop:

Client connects to the local testing server (simulates the edge)

Compute program runs your custom logic, which includes a Fanout handoff

Connection is “handed off” to a local Pushpin instance

Pushpin maintains the connection and delivers messages

Your backend (also running locally) publishes real-time updates

All on your own machine.

Pushpin: The real-time bridge

Open source and maintained by Fastly, Pushpin is a proxy server project that acts as a real-time message broker. It’s the core engine behind Fastly Fanout, holding long-lived connections open and forwarding messages when your backend publishes them.

To test Fanout with Fastly Compute locally, you’ll need a local installation of Pushpin. Installation differs by OS, so refer to the Pushpin installation instructions for details.

Local architecture

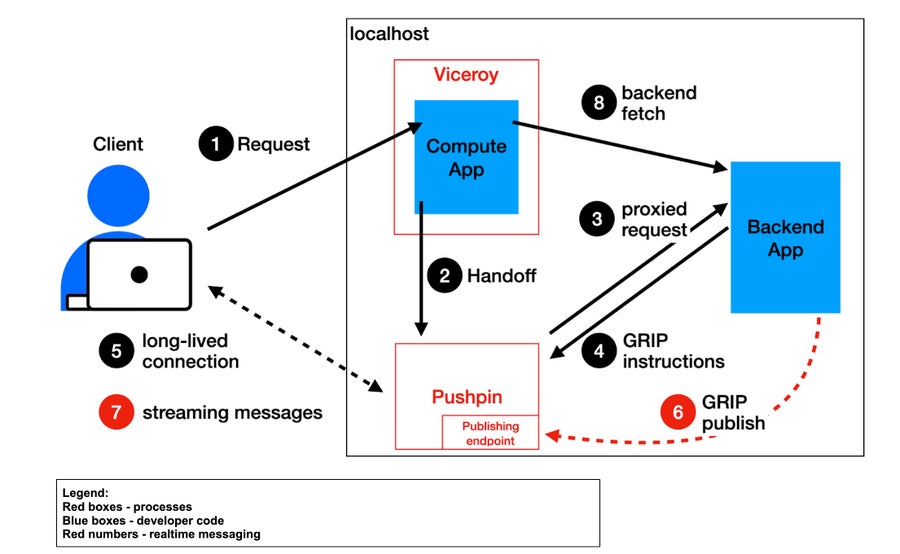

With Pushpin available, all the pieces connect during local development, allowing true local testing of Fanout features in the context of a real Compute program, including edge logic, branching paths, and full end-to-end behavior:

Local Fanout test architecture: When running fastly compute serve with Fanout testing enabled, an instance of Pushpin is automatically configured and started up alongside Viceroy based on the backends defined in fastly.toml, and Viceroy is able to perform a “Fanout handoff” to this local Pushpin instance.

Client makes an HTTP request to your Compute App running in Viceroy (1)

The Compute App performs a “Fanout handoff”, specifying a backend (2)

The diagram only includes one backend application, but multiple are supported: simply list them in fastly.toml.

Pushpin proxies the request to the backend (3)

The backend responds with GRIP instructions, including a channel name (4)

A long-lived connection is established between Pushpin and the client, subscribed to the channel (5)

For ease of visualization, the long-lived connection in the diagram is illustrated as being between the client and Pushpin. In actuality, this virtual connection is also tunneled through Viceroy.
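The GRIP instructions in step (4) are ordinary HTTP response headers. Here is a minimal sketch of what a backend might return to hold a connection open as an HTTP stream (for example, for Server-Sent Events). The helper name `grip_stream_response`, the channel name `test`, and the initial body are illustrative assumptions and not part of any Fastly or Pushpin library. `Grip-Hold` and `Grip-Channel` are the GRIP headers that ask the proxy to hold the connection and subscribe it to a channel.

```python
from typing import Dict, Tuple


def grip_stream_response(channel: str) -> Tuple[int, Dict[str, str], bytes]:
    """Build a (status, headers, body) triple for a GRIP stream hold.

    Hypothetical helper: adapt the return shape to your web framework.
    """
    headers = {
        # The client sees a normal Server-Sent Events response.
        "Content-Type": "text/event-stream",
        # Ask the GRIP proxy (Pushpin locally) to keep the connection open.
        "Grip-Hold": "stream",
        # Subscribe the held connection to this channel.
        "Grip-Channel": channel,
    }
    # Initial body sent to the client before the connection is held.
    # An SSE comment line is used here as an assumption; it may be empty.
    body = b": connected\n\n"
    return 200, headers, body
```

Whatever framework the backend uses, the important part is that these headers reach Pushpin in the response; the proxy consumes the `Grip-*` headers and does not forward them to the client.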

Publishing messages locally: To simulate real-time messaging, Pushpin needs to receive a POST to its publishing endpoint, which is available by default at:

http://localhost:5561/publish/

You can send messages here from a backend service running locally.

👉 Why local? Because the publishing endpoint lives at http://localhost:5561/publish/. If your backend is remote, it won’t be able to reach this local URL.

With this in place, you can now test the full real-time loop locally.

Your backend sends a publish message to Pushpin (6)

Pushpin delivers it instantly to the client (7)

It’s the same Fanout publishing flow as in production, just local.

👉 See the API reference for the publishing message format and examples.
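As a rough illustration of steps (6) and (7), the sketch below publishes a Server-Sent Events message to the local publishing endpoint using only the Python standard library. The function names `build_publish_body` and `publish` and the channel name are hypothetical. The JSON layout (`items`, `channel`, `formats`, `http-stream`) follows the GRIP publish format for HTTP streaming; check the API reference for the authoritative schema and for other formats.

```python
import json
import urllib.request

# Default local publishing endpoint, as given in this post.
PUBLISH_URL = "http://localhost:5561/publish/"


def build_publish_body(channel: str, data: str) -> bytes:
    """Encode one GRIP publish item carrying a single-line SSE message."""
    item = {
        "channel": channel,
        "formats": {
            # "http-stream" content is written as-is to held stream connections.
            # Assumes `data` contains no newlines (one SSE "data:" line).
            "http-stream": {"content": f"data: {data}\n\n"},
        },
    }
    return json.dumps({"items": [item]}).encode("utf-8")


def publish(channel: str, data: str, url: str = PUBLISH_URL) -> int:
    """POST the message to the publishing endpoint and return the HTTP status."""
    request = urllib.request.Request(
        url,
        data=build_publish_body(channel, data),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

With the local setup running, calling `publish("test", "hello")` from the backend should deliver `data: hello` to every client whose held connection is subscribed to the `test` channel.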

Non-Fanout requests: The Compute App can have code paths where it chooses to handle the request without performing a “Fanout handoff”. In this case, the behavior is the same as a traditional Compute App:

Client makes an HTTP request to your Compute App running in Viceroy (1)

Compute App builds a standard HTTP response

May include standard backend fetches (8)

Compute App sends standard short-lived response over request connection (1).

How to get started

To work with Fanout testing locally, you’ll need the following:

The latest version of the Fastly CLI.

A local installation of Pushpin.

The simplest way to get started is to check out the Compute Starter Kits for Fanout that we provide for each of our supported programming languages. They have all been updated for local Fanout testing, and work right out of the box.

To enable Pushpin-powered Fanout testing locally for your existing application:

1. In your fastly.toml, add:

[local_server.pushpin]

enable = true

2. Configure your backend application locally:

Make sure your fastly.toml refers to it as one of its backends.

Make sure your backend application is configured to publish GRIP messages at http://localhost:5561/publish/.

3. Start your backend application.

4. Run your Compute service with:

fastly compute serve

The CLI will:

Launch Pushpin

Configure routes based on your fastly.toml backend definitions

Start Viceroy in Fanout-proxying mode

You can customize Pushpin’s ports via the local_server.pushpin section if needed.

👉 The Fanout documentation has been updated with full setup instructions, publishing examples, and test patterns.

Got questions?

We’re excited to bring full local testing to Fanout. We built this based on real developer needs—including our own. I’ve already used this setup on several projects internally, and I’d love to hear how you’re using it too.

If you have any questions or want to share what you’re building, come join the conversation in the Fastly Community.