We at Fastly have a credo: “latency kills.” Slow is never optimal when it comes to the internets, and in previous posts, we’ve alluded to the importance of caching in our ongoing quest for blazing speed. Today, we'll dig in and explore how caching helps speed up applications and lighten server load, delivering a fast, totally sweet experience for your end user.

Set your phasers

A cache holds temporary copies of data that your end users would’ve otherwise gotten directly from your origin servers. For the purpose of optimization (read: time and money), we want to spare the data source; hitting it as infrequently as possible is the name of the game.

Fastly under the hood

When Fastly is placed in front of a server, it caches items as users request them: everything from APIs, ESIs, HTML, and CSS to audio, video, JavaScript, and small objects. This means we can cache your entire site, including static content (like images) and dynamic content (like breaking headlines or user comments on a popular news article).

Serving items on request in this manner eliminates the need to pre-fill or “warm” the cache. This setup, known as a reverse proxy, is largely accomplished at Fastly via open-source caching software called Varnish. It's what allows us to unburden your origin servers by listening for changes and immediately caching (and later serving) updated content.
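To make the "no warming required" idea concrete, here's a minimal sketch of a cache that fills itself on request. It is a toy illustration, not how Varnish or Fastly is implemented; `PullThroughCache` and `fake_origin` are hypothetical names invented for this example.

```python
from typing import Callable, Dict


class PullThroughCache:
    """Toy cache that fills itself the first time each URL is requested."""

    def __init__(self, fetch_from_origin: Callable[[str], str]) -> None:
        self._fetch = fetch_from_origin  # hypothetical origin fetcher
        self._store: Dict[str, str] = {}
        self.origin_hits = 0  # how many times we had to bother the origin

    def get(self, url: str) -> str:
        if url not in self._store:
            # Cache miss: go to the origin once and keep a copy.
            self._store[url] = self._fetch(url)
            self.origin_hits += 1
        # Cache hit (or freshly filled): the origin is spared.
        return self._store[url]


def fake_origin(url: str) -> str:
    # Stand-in for a real origin server (an assumption for this sketch).
    return f"body of {url}"


cache = PullThroughCache(fake_origin)
for _ in range(3):
    cache.get("/index.html")

print(cache.origin_hits)
```

Three user requests cost the origin a single fetch; the other two are answered from the copy the first request left behind.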

Managing the cache

The cache is a double-edged sword. Fetching copies of objects from cache is far faster than fetching them from the origin servers, but it's incredibly unlikely that these objects will stay unchanged forever. Clearing stale content from any cache is crucial; it doesn't matter how fast content gets to the user if it's outdated. Fastly helps to manage the cache so you're always serving the end user fresh content.

By setting certain HTTP headers, you can control the length of time an object is held in the cache, as well as set logical groups by which objects can be purged. Examples of these headers include Cache-Control (with directives such as max-age), ETag, Date, Last-Modified, and Expires. Note that the names of these headers tend to correspond with their function. Expires, for instance, is a timestamp after which a response is considered stale. These headers act as a two-for-one, telling both Fastly and the browser which bits of content to hold onto, and which bits to send to the fiery pits of Mordor.

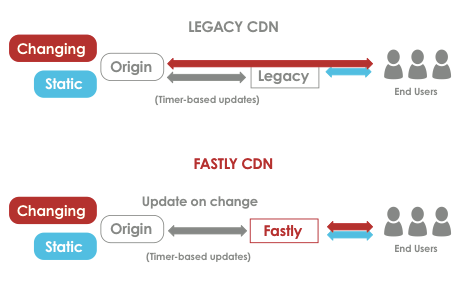
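As a rough illustration of how a cache might turn these headers into a lifetime, the sketch below prefers `Cache-Control: max-age` and falls back to `Expires` minus `Date`. It is a simplified reading of the standard HTTP caching rules, not Fastly's actual logic: it ignores other directives (such as `s-maxage` or `no-store`) and assumes header names are passed with exactly this capitalization. `freshness_lifetime` is a hypothetical helper name.

```python
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def freshness_lifetime(headers: Mapping[str, str]) -> Optional[int]:
    """Return how many seconds a response may be cached, or None if unspecified."""
    # 1. Prefer the max-age directive inside Cache-Control.
    for directive in headers.get("Cache-Control", "").split(","):
        name, _, value = directive.strip().partition("=")
        value = value.strip('"')
        if name.lower() == "max-age" and value.isdigit():
            return int(value)

    # 2. Otherwise fall back to the Expires timestamp, relative to Date.
    if "Expires" in headers and "Date" in headers:
        try:
            expires = parsedate_to_datetime(headers["Expires"])
            date = parsedate_to_datetime(headers["Date"])
        except (TypeError, ValueError):
            return None  # unparseable timestamps: treat as unspecified
        return max(0, int((expires - date).total_seconds()))

    return None


print(freshness_lifetime({"Cache-Control": "public, max-age=3600"}))
print(freshness_lifetime({
    "Date": "Thu, 01 Jan 2015 00:00:00 GMT",
    "Expires": "Thu, 01 Jan 2015 00:10:00 GMT",
}))
```

The first response may be held for an hour; the second for the ten minutes between its Date and Expires timestamps.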

Beyond headers, there are two ways to invalidate items in the cache. The first is ASK: the cache periodically asks the origin if the cached object is still valid, and the origin replies “YES” or “NO,” in which case it sends the revised object. The difficulty with this method is determining an appropriate frequency, which is set by the TTL (Time To Live) value. A short TTL means lots of ‘Still Valid?’ – ‘Yes.’ exchanges between the cache and your origin, and that extra server load means higher costs. A long TTL means an invalid object could potentially be delivered to the end user.

The second is to let the origin tell Fastly when it changes an object. This is much faster and more cost effective, as it eliminates useless exchanges between the cache and the origin. Fastly can purge individual items by URL, entire services, or groups of objects by key.
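The trade-off between the two approaches can be sketched with a toy simulation. Everything here is hypothetical (`Origin`, `TtlCache`, and the simulated clock passed in as `now`), and for brevity an expired entry is simply re-fetched rather than revalidated with a real conditional request.

```python
from typing import Dict, Tuple


class Origin:
    """Hypothetical origin that versions each object and counts requests."""

    def __init__(self) -> None:
        self.versions: Dict[str, int] = {}
        self.requests = 0

    def update(self, key: str) -> None:
        self.versions[key] = self.versions.get(key, 0) + 1

    def fetch(self, key: str) -> int:
        self.requests += 1
        return self.versions[key]


class TtlCache:
    """Toy cache that goes back to the origin when the TTL runs out or after a purge."""

    def __init__(self, origin: Origin, ttl: float) -> None:
        self.origin = origin
        self.ttl = ttl
        self._store: Dict[str, Tuple[int, float]] = {}  # key -> (version, expiry)

    def get(self, key: str, now: float) -> int:
        entry = self._store.get(key)
        if entry is None or now >= entry[1]:
            # Missing or expired: go back to the origin.
            version = self.origin.fetch(key)
            self._store[key] = (version, now + self.ttl)
            return version
        return entry[0]  # still within TTL: serve the cached copy

    def purge(self, key: str) -> None:
        # The origin "tells" the cache that this object changed.
        self._store.pop(key, None)


origin = Origin()
origin.update("/headline")               # version 1 published
cache = TtlCache(origin, ttl=60)

cache.get("/headline", now=0)            # miss: fetches version 1
origin.update("/headline")               # version 2 published
stale = cache.get("/headline", now=30)   # within TTL, so the old copy is served
cache.purge("/headline")                 # origin notifies the cache of the change
fresh = cache.get("/headline", now=31)   # next request fetches the new version

print(stale, fresh, origin.requests)
```

With the 60-second TTL alone, the user at second 30 gets the outdated headline; after the purge, the very next request gets the new one, and the origin has still only been contacted twice.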

The takeaway

With Fastly, you can painlessly implement and manage your cache to reduce latency and cut backend costs. Caching can speed up your application and spare your servers.

For more on the benefits of caching, check out Artur's recent Surge talk about latency.