A cache is a location that temporarily stores data for faster retrieval when something needs access to it. Caching refers to the process of storing this data. You may also have heard the term cache used in non-technical contexts, as a place to store something for convenience. For example, campers and hikers may cache a food supply along a trail. But let’s take a closer look at what caching means online.

In a perfect world, a user visiting your website would have all the data assets needed to quickly render the site stored locally. However, the assets are located elsewhere, perhaps in a data center or cloud service, and there is a cost to retrieving them. The user pays in latency (the time required to generate and load the assets), while you pay a monetary cost. These fees cover not only hosting your content but also what are known as egress costs: the charge for moving that content out of storage when visitors request it. The more traffic your website receives, the more you pay to deliver content in response to requests, many of which are for the same content.

This is where caching comes in: it lets you store copies of your content so they can be delivered faster while using fewer resources.

How caching works

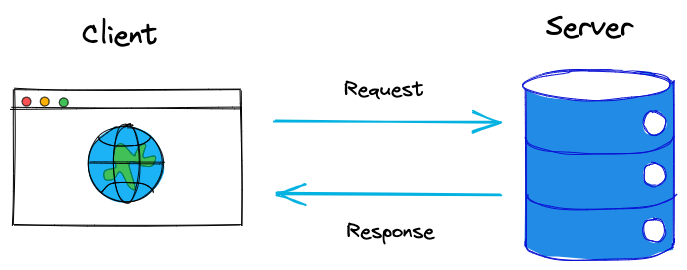

To understand how caching works, we first need to understand how HTTP requests and responses work. When a user clicks a link or enters your website’s URL in their address bar, their browser (the client) sends a request to the server where your content is hosted (your origin server). The origin server processes the request and sends a response back to the client.

While this may seem to happen instantaneously, it actually takes time for the origin server to process the request, generate the response, and send it to the client. Additionally, rendering your website usually requires many assets, including HTML pages, images, and CSS files, and each one must be requested separately.

At this point, the user’s local cache enters the picture. Their browser can cache some static assets, like the header image with your logo and the site's stylesheet, on their device to help improve performance the next time they access your site. However, this type of cache is only helpful to that one user.
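A browser decides whether it can reuse a locally cached asset largely from the `Cache-Control` header the server sent with it. The sketch below is a simplified version of that check, assuming only the `max-age`, `no-store`, and `no-cache` directives matter; real browsers follow the full HTTP caching rules (RFC 9111), including heuristics and revalidation, which are left out here. The function names are illustrative, not a browser API.

```python
from typing import Dict, Optional


def parse_cache_control(header: str) -> Dict[str, str]:
    """Split a Cache-Control header into {directive: value}, with lower-cased names."""
    directives: Dict[str, str] = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.strip().lower()] = value.strip().strip('"')
    return directives


def max_age(header: str) -> Optional[int]:
    """Seconds a response may be reused as-is, or None if it may not be reused without asking."""
    directives = parse_cache_control(header)
    if "no-store" in directives or "no-cache" in directives:
        return None  # must not be stored, or must be revalidated before every reuse
    value = directives.get("max-age", "")
    return int(value) if value.isdigit() else None


def is_fresh(header: str, age_seconds: int) -> bool:
    """True if a cached copy that is age_seconds old can be served from the browser cache."""
    limit = max_age(header)
    return limit is not None and age_seconds < limit


# A stylesheet cached an hour ago with a one-day max-age can be reused locally.
print(is_fresh("public, max-age=86400", age_seconds=3600))  # True
```

Because this cache lives on one device, the check only helps that one user; every other visitor still downloads the stylesheet at least once.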

If you want to cache assets for every user visiting your site, you should consider a remote cache like a reverse proxy.
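To illustrate why a remote cache helps every visitor, here is a minimal sketch of a shared cache placed in front of the origin, assuming a hypothetical `fetch_from_origin` callable that stands in for a real HTTP request. It ignores expiry and headers entirely; the point is only that one visitor’s cache miss fills the cache for everyone else.

```python
from typing import Callable, Dict


class ReverseProxyCache:
    """A shared cache placed in front of the origin server and used by every visitor."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin  # hypothetical call to your origin
        self._store: Dict[str, bytes] = {}
        self.origin_requests = 0

    def get(self, url: str) -> bytes:
        cached = self._store.get(url)
        if cached is not None:
            return cached  # served from the shared cache; the origin is not contacted
        self.origin_requests += 1
        body = self._fetch_from_origin(url)
        self._store[url] = body  # keep the copy for the next visitor, whoever it is
        return body


proxy = ReverseProxyCache(lambda url: f"contents of {url}".encode())
for _visitor in ("alice", "bob", "carol"):
    proxy.get("/logo.png")  # three different visitors ask for the same asset
print(proxy.origin_requests)  # 1
```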

What are the different kinds of caching?

There are several types of caching employed at various levels of the internet infrastructure, each serving a specific purpose. Let’s explore the different kinds of caching and see how they contribute to a smoother browsing experience.

1. CDN caching

CDN (Content Delivery Network) caching reduces latency by delivering your content from a location closer to the user, which can significantly improve load times. It works by storing copies of content on edge servers distributed around the globe. When a user requests content, the CDN serves it from the nearest edge server, provided that server already holds a cached copy, rather than waiting for your origin server to respond. This decreases the workload on the origin server and supports higher traffic volumes by distributing requests across many servers.
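The sketch below models the idea with a few edge servers, each holding its own cache. It assumes “nearest” simply means the lowest latency in a lookup table; real CDNs steer users to an edge through DNS or anycast routing instead. The edge names, latencies, and helper functions are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class EdgeServer:
    name: str
    cache: Dict[str, bytes] = field(default_factory=dict)


def cdn_get(
    url: str,
    edges: List[EdgeServer],
    latency_ms: Dict[str, float],
    fetch_from_origin: Callable[[str], bytes],
) -> Tuple[bytes, str]:
    """Serve url from the lowest-latency edge, going to the origin only on a miss."""
    edge = min(edges, key=lambda e: latency_ms[e.name])  # "nearest" = lowest latency here
    if url in edge.cache:
        return edge.cache[url], f"HIT at {edge.name}"
    body = fetch_from_origin(url)  # only this edge's first request reaches the origin
    edge.cache[url] = body
    return body, f"MISS at {edge.name}"


def origin(url: str) -> bytes:
    return b"<html>...</html>"  # stand-in for the origin server's response


edges = [EdgeServer("frankfurt"), EdgeServer("tokyo")]
latency = {"frankfurt": 12.0, "tokyo": 240.0}  # as seen from a user in Europe
print(cdn_get("/", edges, latency, origin)[1])  # MISS at frankfurt
print(cdn_get("/", edges, latency, origin)[1])  # HIT at frankfurt
```

Note that each edge keeps its own copy, so a user near the Tokyo edge would still trigger one miss there.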

2. Server-side caching

Unlike CDN caching, server-side caching stores ready-to-use copies of data on the origin server, so the server does not have to regenerate every piece of content from scratch (for example, by re-running database queries) each time a page is requested. Reusing stored data objects reduces the number of database queries and improves your site’s performance. Server-side caching comes in several forms, including:

Page caching: Retains a copy of HTML pages

Object caching: Keeps reusable data objects

Opcode caching: Stores precompiled PHP code

Database caching: Caches database query results

Server-side caching covers more than finished content: the cached data can range from full web pages to individual database query results.
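Object and database caching can be sketched with Python’s standard `functools.lru_cache`, which memoizes a function’s results in memory. The `get_product` function and its simulated query are hypothetical stand-ins for a real database call, and an in-process cache like this is only a simplification: it lives inside one server process, whereas production sites often use a shared cache store.

```python
from functools import lru_cache

DB_CALLS = 0  # counts how many times the (simulated) database is actually queried


@lru_cache(maxsize=1024)
def get_product(product_id: int) -> tuple:
    """Object/database caching: the result for each product_id is computed once."""
    global DB_CALLS
    DB_CALLS += 1
    # Stand-in for a real query such as SELECT ... WHERE id = ? (hypothetical).
    return (product_id, f"Product {product_id}")


for _ in range(3):
    get_product(42)  # first call queries the database; the next two are cache hits
print(DB_CALLS)  # 1

get_product.cache_clear()  # invalidate after the product data changes
get_product(42)
print(DB_CALLS)  # 2
```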

3. DNS caching

DNS (Domain Name System) resolution is the process web browsers use to translate a domain name, such as www.Fastly.com, into the specific IP address of the origin server hosting the website. Web browsers speed up this process with DNS caching: they store DNS query results locally so that lookups on future visits can be resolved faster. Essentially, before querying external DNS servers, your browser checks its own DNS cache to see whether it already knows the correct IP address. This significantly reduces the time needed to resolve domain names, speeding up website access.
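DNS records carry their own TTL, which limits how long a cached answer may be reused. A minimal sketch of a local DNS cache that honors it is shown below. The upstream `resolve` function is hypothetical (it returns a fixed address from the 192.0.2.0/24 documentation range), and a fake clock keeps the example deterministic.

```python
import time
from typing import Callable, Dict, Tuple


class DnsCache:
    def __init__(
        self,
        resolve: Callable[[str], Tuple[str, int]],
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._resolve = resolve  # hypothetical upstream lookup returning (ip, ttl_seconds)
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # hostname -> (ip, expires_at)
        self.upstream_lookups = 0

    def lookup(self, hostname: str) -> str:
        key = hostname.lower()  # DNS names are case-insensitive
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0]  # answered from the local cache
        self.upstream_lookups += 1
        ip, ttl = self._resolve(key)
        self._entries[key] = (ip, now + ttl)
        return ip


clock = [0.0]  # fake clock so the example is deterministic
cache = DnsCache(lambda host: ("192.0.2.10", 300), clock=lambda: clock[0])
cache.lookup("www.example.com")
cache.lookup("www.example.com")  # answered from the local cache
clock[0] = 301.0                 # the record's TTL has passed
cache.lookup("www.example.com")  # expired, so ask upstream again
print(cache.upstream_lookups)    # 2
```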

Unlike the previous two types of caching we’ve covered, DNS caching happens locally on the user’s side rather than on a server. It is also used specifically for resolving domain names, rather than for delivering content or streamlining database queries.

4. Content caching

Content caching is a more general category that refers to duplicating and storing many kinds of web content to serve users more efficiently. Content caching can occur at the server level (as with server-side caching and CDN caching), but it also occurs at various other levels, including:

Browser caching: Stores web content locally on the user’s device so the site reloads faster when it is accessed later.

Proxy caching: Stores web content on intermediary servers (proxies) that sit between the user and the origin server on the network.

Gateway caching: Stores a copy of data at gateways within an organization’s network.

Content caching is highly varied, occurring at multiple levels and covering a wide range of content types, including images, videos, and full HTML pages. By making copies of content more easily accessible, content caching provides a faster and smoother browsing experience for users, enabling sites to load more efficiently and reducing overall bandwidth usage on the network.
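One way to picture these levels working together is a chain of caches checked from closest to farthest, with the origin as the last resort. The sketch below assumes the order browser, then proxy, then gateway, and uses plain dictionaries as hypothetical caches; real deployments vary in which layers exist and how they are ordered.

```python
from typing import Callable, Dict, List, Tuple


def layered_get(
    url: str,
    layers: List[Tuple[str, Dict[str, bytes]]],
    fetch_from_origin: Callable[[str], bytes],
) -> Tuple[bytes, str]:
    """Check each cache layer in order (closest to the user first); fall back to the origin."""
    for i, (name, store) in enumerate(layers):
        if url in store:
            body = store[url]
            for _, closer in layers[:i]:
                closer[url] = body  # fill the closer layers that missed
            return body, name
    body = fetch_from_origin(url)
    for _, store in layers:
        store[url] = body  # every layer keeps a copy on the way back to the user
    return body, "origin"


browser: Dict[str, bytes] = {}
proxy: Dict[str, bytes] = {}
gateway: Dict[str, bytes] = {}
layers = [("browser", browser), ("proxy", proxy), ("gateway", gateway)]


def origin(url: str) -> bytes:
    return b"<html>...</html>"  # stand-in for the origin server's response


print(layered_get("/index.html", layers, origin)[1])  # origin
print(layered_get("/index.html", layers, origin)[1])  # browser
browser.clear()  # e.g. the user cleared their browser cache
print(layered_get("/index.html", layers, origin)[1])  # proxy
```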

CDN and caching

Because caching works by keeping copies of data on hand for later use, the system must first check whether a copy already exists. When the requested content is found in the cache, a “cache hit” occurs, and the content is delivered directly from the edge server to the user, resulting in faster load times. The “cache hit ratio” is the percentage of requests that result in a cache hit.
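As a formula, hit ratio = hits / (hits + misses) × 100%. A small helper makes the arithmetic concrete; the request counts in the example are illustrative, not measured data.

```python
def cache_hit_ratio(hits: int, misses: int) -> float:
    """Percentage of requests served from cache: hits / (hits + misses) * 100."""
    total = hits + misses
    if total == 0:
        return 0.0  # no traffic yet; report 0 rather than divide by zero
    return 100.0 * hits / total


# Out of 1,000 requests, 950 were served from the edge and 50 went to the origin.
print(cache_hit_ratio(hits=950, misses=50))  # 95.0
```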

CDNs can typically achieve higher cache hit ratios than other server-side caching systems. This is because they deploy numerous edge servers around the world, strategically positioned close to end users. Because CDNs serve a large user base, there is a high likelihood that another user in the same geographic region has already requested the content, causing a copy to be stored on that region’s edge server.

However, not every request results in a cache hit. Sometimes the edge server cannot deliver the content from its cache and must retrieve it from the origin server instead. This is called a “cache miss,” and it can occur for various reasons:

First-time request (cold cache)

Cache invalidation or purging

Capacity limitations

Content updates
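Several of these causes can be seen in a small sketch of a fixed-size cache. It assumes a least-recently-used (LRU) eviction policy, which is one common choice but not necessarily what any given CDN uses, and a `purge` method standing in for explicit invalidation after a content update.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items: OrderedDict = OrderedDict()  # oldest entry first

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._items:
            return None  # miss: never cached, evicted, or purged
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: bytes) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry

    def purge(self, key: str) -> None:
        self._items.pop(key, None)  # explicit invalidation, e.g. after a content update


cache = LRUCache(capacity=2)
print(cache.get("/a"))  # None: cold cache, first request
cache.put("/a", b"A")
cache.put("/b", b"B")
cache.get("/a")         # /a becomes the most recently used entry
cache.put("/c", b"C")   # over capacity, so /b is evicted
print(cache.get("/b"))  # None: capacity limitation
cache.purge("/a")       # /a was updated at the origin
print(cache.get("/a"))  # None: invalidated
```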

A cache miss can also occur because cached content has expired. Content is cached on a CDN according to a Time-to-Live (TTL) value; once the TTL runs out, the cached copy is treated as expired, and the next request for it has to go back to the origin server. Developers and network engineers set TTL values based on the type of content being cached. Dynamic content, like news articles, tends to have a short TTL of a few seconds to a few minutes. By contrast, static content, such as images, videos, CSS, and JavaScript files, changes rarely and tends to have longer TTLs of days to weeks.
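A minimal sketch of TTL-based expiry is shown below. The per-content-type TTLs are hypothetical values chosen to match the ranges above, not defaults from any CDN, and a fake clock stands in for real time so the example is deterministic.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical TTLs in seconds, per content type.
TTL_BY_TYPE = {
    "text/html": 60,                          # dynamic pages, e.g. news articles
    "text/css": 7 * 24 * 3600,                # static assets: one week
    "application/javascript": 7 * 24 * 3600,
    "image/png": 30 * 24 * 3600,              # 30 days
}


class TTLCache:
    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, expires_at)

    def put(self, url: str, body: bytes, content_type: str) -> None:
        ttl = TTL_BY_TYPE.get(content_type, 0)  # unknown types are not cached
        if ttl > 0:
            self._entries[url] = (body, self._clock() + ttl)

    def get(self, url: str) -> Optional[bytes]:
        entry = self._entries.get(url)
        if entry is None:
            return None  # never cached: a miss
        body, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[url]  # TTL ran out: a miss, so the caller refetches from origin
            return None
        return body


now = [0.0]  # fake clock
cache = TTLCache(clock=lambda: now[0])
cache.put("/news/today", b"<html>...</html>", "text/html")
cache.put("/styles/site.css", b"body { margin: 0 }", "text/css")
now[0] = 120.0  # two minutes later
print(cache.get("/news/today"))                   # None
print(cache.get("/styles/site.css") is not None)  # True
```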

Should you clear your cache?

Clearing your cache can be beneficial in certain situations, but it's not always necessary or recommended. Below, we’ve highlighted some of the most common reasons why you should clear your cache.

Free up storage

Cached data can accumulate locally over time and take up too much storage space on your system. This can be especially problematic on personal devices like laptops or netbooks with limited storage capacity. Clearing the cache can often free up valuable storage space and help your device run more smoothly.

Troubleshooting performance issues

Cached data can become corrupted or outdated over time. This can cause websites to load more slowly or glitch out, preventing you from accessing key functions. You can often resolve these issues and remove the corrupted or outdated files by clearing your cache.

Improve privacy

Cached data can include sensitive information such as login credentials, browsing history, or other private data. Clearing your cache is a quick way to protect this private information by removing it from your system.

However, there are also situations where clearing your cache may not be necessary or advisable.

1. Loss of data

When a user clears their cache, they delete the stored data that helps websites and applications load faster. The drawback is that websites and applications then need to download these resources again from scratch, which can lead to slower loading times until the cache is rebuilt.

2. Temporary fix

Clearing a cache can resolve certain performance issues, but the fix is often only temporary. If the underlying issue is not resolved, the performance problems may crop up again once the cache is rebuilt. It’s critical to identify and address the root cause to achieve a long-term solution.

3. Loss of performance benefits

One of the primary benefits of caching is improved performance. Removing the data in your cache leads to a loss of this added value.

How does Fastly use caching?

Fastly employs caching in its CDN to speed up the delivery of your content to your audience. By storing exact copies of your content on our servers around the world, we reduce latency and the load on your origin server, so your users get fast access from the server closest to them.

Fastly's caching features include Instant Purge™ for rapid content updates and customizable caching rules. Our approach upgrades the user experience with faster access to content while also reducing bandwidth and egress costs.