Authentication is scary, difficult, dangerous, and… essential. Most apps need it, so it's a precondition on almost all end-user requests. The perfect web app authentication would be close to the end user, isolated from the rest of the system, implemented and maintained by security professionals, and easy to integrate.

That sounds to us a lot like OAuth implemented in Compute@Edge on Fastly — fast, distributed, secure, and autonomous. Let's explore how to do it.

First things first: authentication is proving who I am, and it is distinct from authorization, which is what I am allowed to do. We'll start by focusing on how to establish the user's identity. By far the most common way to do this is with OAuth 2.0 and OpenID Connect.

When requests arrive at the edge, we need to be able to separate those that can be associated with a specific user from those which are anonymous or invalid. The anonymous requests are directed through an OAuth authorization code flow, and the invalid requests are rejected. The result is that only requests from authenticated users are allowed to proceed to the application origin server.

Here's the flow we're going to create. Let's run through it in more detail:

1. The user makes a request for a protected resource, but they have no session cookie.

2. At the edge, Fastly generates:

   - (a) A unique and non-guessable state parameter, which encodes what the user was trying to do (load /articles/kittens).
   - (b) A cryptographically random string called a code verifier.
   - (c) A code challenge, derived from the code verifier.
   - (d) A time-limited token, authenticated using a secret, that encodes the state and a nonce (a unique value used to mitigate replay attacks).

   We store (a) and (b) in cookies so we can get them back later. We include (c) and (d) in the next request to the authorization server.

3. Fastly builds an authorization URL and redirects the user to the authorization server operated by the identity provider.

4. The user completes login formalities with the identity provider directly. Fastly is not involved until we receive a request to a callback URL with the results of the login process. The IdP includes an authorization code and a state (which should match the time-limited token we created earlier) in that post-login callback.

5. The edge service authenticates the state token returned by the IdP and verifies that the state we stored matches its subject claim.

6. Fastly connects directly to the identity provider and exchanges the authorization code (which is good for only one use) and the code verifier for security tokens:

   - An access token, a key that represents the authorization to perform specific operations on behalf of the user.
   - An ID token, which contains the user's profile information.

7. Fastly redirects the end user to the original request URL (/articles/kittens), with their security tokens stored in cookies.

8. When the user makes the redirected request (or indeed, any subsequent request accompanied by security tokens), Fastly verifies the integrity, validity, and claims of both tokens. If the tokens are still good, we proxy the request to your origin.
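The last step above doesn't spell out what checking the "claims" involves. As a rough sketch of that part only: OpenID Connect Core asks relying parties to check, among other things, that an ID token's `iss` matches the IdP's issuer, that `aud` contains your `client_id`, that `exp` is still in the future, and that `nonce` matches the value sent with the authorization request. The `IdTokenClaims` type and `check_claims` function are hypothetical, and the sketch assumes the JWT has already been decoded and its signature verified against the IdP's JWKS, neither of which is shown.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

/// The subset of ID token claims checked in this sketch (hypothetical type).
/// Assumes the JWT was already base64url-decoded and its signature verified
/// against the IdP's JWKS; neither step is shown here.
struct IdTokenClaims {
    iss: String,
    aud: Vec<String>,
    exp: u64, // expiry, in seconds since the Unix epoch
    nonce: Option<String>,
}

#[derive(Debug, PartialEq)]
enum ClaimError {
    WrongIssuer,
    WrongAudience,
    Expired,
    NonceMismatch,
}

/// Check issuer, audience, expiry and (when an expected value is known) nonce.
fn check_claims(
    claims: &IdTokenClaims,
    expected_issuer: &str,
    client_id: &str,
    expected_nonce: Option<&str>,
    now: u64,
) -> Result<(), ClaimError> {
    if claims.iss != expected_issuer {
        return Err(ClaimError::WrongIssuer);
    }
    // `aud` may list several audiences; our client_id must be one of them.
    if !claims.aud.iter().any(|aud| aud == client_id) {
        return Err(ClaimError::WrongAudience);
    }
    // The current time must be strictly before `exp`.
    if now >= claims.exp {
        return Err(ClaimError::Expired);
    }
    // The nonce ties the token to the authorization request that produced it.
    if let Some(expected) = expected_nonce {
        if claims.nonce.as_deref() != Some(expected) {
            return Err(ClaimError::NonceMismatch);
        }
    }
    Ok(())
}

/// Current time in whole seconds since the Unix epoch (0 if the clock is before 1970).
fn unix_now() -> u64 {
    SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .map(|d| d.as_secs())
        .unwrap_or(0)
}
```

Passing `now` in keeps the check easy to test; a production check would typically also allow a small clock-skew leeway.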

Before you start building this, you're going to need an identity provider.

Getting an identity provider (IdP)

You might operate your own identity service, but any OAuth 2.0 and OpenID Connect (OIDC) conformant provider will do. Follow these steps to set up the IdP:

1. Register your application with the identity provider. Make a note of the client_id and the address of the authorization server.

2. Save a local copy of the OpenID Connect discovery metadata associated with the server. You'll find this at /.well-known/openid-configuration on the authorization server's domain. For example, here's the one provided by Google.

3. Save a local copy of the JSON Web Key Set (JWKS) metadata. You'll find this under the jwks_uri property in the discovery metadata document you just downloaded.
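The discovery document lives at a fixed path relative to the issuer URL. A minimal sketch, assuming your IdP follows OpenID Connect Discovery 1.0: `discovery_url` builds that URL, and the hypothetical `OpenIdConfiguration` struct lists the metadata fields this walkthrough relies on. Field names match the JSON keys in the discovery document; the struct itself is illustrative, and JSON parsing is left out to keep the sketch dependency-free.

```rust
/// Build the discovery URL for an issuer as described in OpenID Connect
/// Discovery 1.0: drop any trailing slash, then append the well-known path.
fn discovery_url(issuer: &str) -> String {
    format!("{}/.well-known/openid-configuration", issuer.trim_end_matches('/'))
}

/// Metadata fields used in this walkthrough (illustrative; parsing omitted).
#[allow(dead_code)]
struct OpenIdConfiguration {
    issuer: String,
    authorization_endpoint: String, // where users are redirected to log in
    token_endpoint: String,         // where the authorization code is exchanged
    jwks_uri: String,               // where the IdP publishes its signing keys
}

impl OpenIdConfiguration {
    /// Discovery requires the document's `issuer` to be identical to the issuer
    /// URL it was fetched from; a mismatch means the metadata shouldn't be trusted.
    fn issuer_matches(&self, issuer: &str) -> bool {
        self.issuer == issuer
    }
}
```

For Google, `discovery_url("https://accounts.google.com")` gives `https://accounts.google.com/.well-known/openid-configuration`.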

Now, create a Compute@Edge service on Fastly that will talk to the IdP.

Create the Compute@Edge service

We've put everything you need for this project into a repository on GitHub, but you still need a Fastly account that is enabled for Compute@Edge services. If you haven't already, follow the Compute@Edge welcome guide, which installs the Fastly CLI and the Rust toolchain on your local machine. Now we can start coding!

Clone the repository:

```shell
git clone https://github.com/fastly/compute-rust-auth
```

Follow the instructions in the README.

Congratulations, you've deployed a Compute@Edge service! To complete the integration, your identity provider needs to know to direct users to the Compute@Edge service when they finish logging in.

Link the identity provider

Add https://{some-funky-words}.edgecompute.app/callback to the list of allowed callback URLs in your identity provider’s app configuration. This allows the authorization server to send the user back to your Fastly-hosted website.

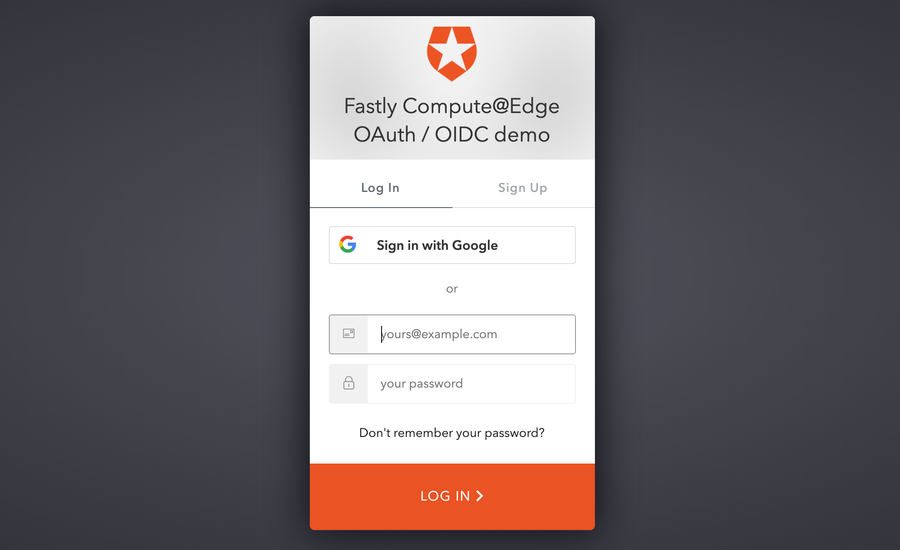

Open your app in a browser and watch the magic happen!

For the purposes of the sample app, any path you try to visit will require authentication. If you are already authenticated, Fastly will proxy the request to your origin, as normal. There's more ambitious stuff you can do here, which we'll talk about in a follow-up blog post. For now, let's look at how this basic, minimal integration works.

How this all plugs into Compute@Edge

Compute@Edge programs written in Rust using the Fastly Rust SDK have a main function, which is the entry point for requests. Normally, main receives a Request struct and returns a Response struct wrapped in a Result, which is why the snippets below return Ok(...).

The first thing we do in main is to check if we're dealing with a callback path, indicating that the user is returning from the identity provider and is ready to initialize a session. This path always needs to be intercepted and will never be proxied to the backend.

```rust
if req.get_url_str().starts_with(&redirect_uri) {
    // ... snip: Validate state and code_verifier ...
    // ... snip: Check authorization code with identity provider ...
    // ... snip: Identity provider returns access & ID tokens ...

    // Store the tokens as session cookies, clear the intermediary
    // code_verifier and state cookies, and send the user back to
    // the URL they originally requested.
    return Ok(responses::temporary_redirect(
        original_req,
        cookies::session("access_token", &auth.access_token),
        cookies::session("id_token", &auth.id_token),
        cookies::expired("code_verifier"),
        cookies::expired("state"),
    ));
}
```

A satisfactory response from the identity provider will consume the authorization code and the proof key (remember the code verifier and challenge?) and, in return, provide an access_token and an id_token:

The access token is a bearer token, implying that its “bearer” is authorized to act on behalf of the user to access authorized resources.

An ID token is a JSON Web Token (JWT) that encodes identity information about the user.

We’ll use these to authenticate future requests, so we’ll store them in cookies, do away with any intermediary cookies we used for the authentication process – remember the state parameter and code verifier? – and redirect the user back to the URL they originally wanted.
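The `cookies::session` and `cookies::expired` helpers come from the sample repository and aren't shown in the post. Here is a minimal std-only sketch of the kind of `Set-Cookie` values such helpers could produce; the function names and exact attributes are assumptions, not the repository's code. `HttpOnly` keeps the tokens away from page JavaScript, `Secure` restricts them to HTTPS, and `SameSite=Lax` (rather than `Strict`) lets the browser send the `state` and `code_verifier` cookies on the top-level redirect back from the identity provider.

```rust
/// Hypothetical stand-in for the repository's `cookies::session` helper. With no
/// `Expires` or `Max-Age`, this is a session cookie that the browser discards
/// when the browsing session ends.
fn session_cookie(name: &str, value: &str) -> String {
    format!("{}={}; Path=/; Secure; HttpOnly; SameSite=Lax", name, value)
}

/// Hypothetical stand-in for `cookies::expired`: an empty value plus `Max-Age=0`
/// asks the browser to delete the cookie. `Path` must match the original cookie.
fn expired_cookie(name: &str) -> String {
    format!("{}=; Path=/; Max-Age=0; Secure; HttpOnly; SameSite=Lax", name)
}

/// The `Set-Cookie` values a callback response would carry, mirroring the
/// callback snippet: keep the tokens, drop the code_verifier and state cookies.
fn callback_set_cookies(access_token: &str, id_token: &str) -> Vec<String> {
    vec![
        session_cookie("access_token", access_token),
        session_cookie("id_token", id_token),
        expired_cookie("code_verifier"),
        expired_cookie("state"),
    ]
}
```

Each string would become one `Set-Cookie` header on the redirect response.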

If we know that the user is not on the callback path, then we check for cookies that accompany the request so we can determine whether there is an active session (defined by the access token and ID token):

```rust
let cookie = cookies::parse(req.get_header_str("cookie").unwrap_or(""));
if let (Some(access_token), Some(id_token)) = (cookie.get("access_token"), cookie.get("id_token")) {
    // snip: ... validation logic ...

    // Forward the tokens to the origin in custom headers.
    req.set_header("access-token", access_token);
    req.set_header("id-token", id_token);
    return Ok(req.send("backend")?);
}
```

If the session exists and is valid, req.send() dispatches the request upstream to your own origin and returns a Response to send downstream to the client. Our example service passes the access token and ID token to the origin in custom HTTP headers. You could do something different here, depending on how you plan to use identity at the origin (more on that in a minute!).
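The `cookies::parse` helper also lives in the sample repository. A minimal std-only sketch of an equivalent, with hypothetical names, shows what that first line does: split the `Cookie` header into name/value pairs, then treat the request as carrying a session only when both token cookies are present and non-empty.

```rust
use std::collections::HashMap;

/// Hypothetical std-only stand-in for the repository's `cookies::parse`:
/// splits a `Cookie` header such as "a=1; b=2" into name/value pairs.
/// Only the first '=' separates name from value, so values may contain '='.
fn parse_cookies(header: &str) -> HashMap<&str, &str> {
    header
        .split(';')
        .filter_map(|pair| pair.split_once('='))
        .map(|(name, value)| (name.trim(), value.trim()))
        .collect()
}

/// Both session tokens, or `None` if either cookie is missing or empty.
/// Presence alone proves nothing: the tokens still need validating.
fn session_tokens<'h>(cookies: &HashMap<&'h str, &'h str>) -> Option<(&'h str, &'h str)> {
    let access = cookies.get("access_token").copied().filter(|v| !v.is_empty())?;
    let id = cookies.get("id_token").copied().filter(|v| !v.is_empty())?;
    Some((access, id))
}
```

Returning borrowed slices avoids copying the tokens, which can be long JWTs, before they are validated and forwarded.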

Lastly, if the user is neither authenticated nor in the process of logging in, we start the process by sending them to the identity provider to sign in:

```rust
let authorize_req =
    Request::get(settings.openid_configuration.authorization_endpoint)
        .with_query(&AuthCodePayload {
            client_id: &settings.config.client_id,
            code_challenge: &pkce.code_challenge,
            code_challenge_method: &settings.config.code_challenge_method,
            redirect_uri: &redirect_uri,
            response_type: "code",
            scope: &settings.config.scope,
            state: &state_and_nonce,
            nonce: &nonce,
        })
        .unwrap();

Ok(responses::temporary_redirect(
    authorize_req.get_url_str(),
    cookies::expired("access_token"),
    cookies::expired("id_token"),
    cookies::session("code_verifier", &pkce.code_verifier),
    cookies::session("state", &state),
))
```

We're using Request::get here to build a request, but instead of sending it, we extract the serialized URL and redirect the user to it. We also save the state parameter and code verifier so we can check them later, and delete any access and ID tokens because, if they exist, our earlier code obviously didn't approve of them!
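`pkce.code_challenge` comes from a PKCE helper in the sample repository that the post doesn't show. Assuming the configured `code_challenge_method` is `S256` (the method RFC 7636 says clients must use when they can), the challenge is the unpadded base64url encoding of the SHA-256 hash of the verifier. The sketch below is std-only, so it carries its own small SHA-256; a real service would use a vetted crypto crate instead. All names are hypothetical, and generating the verifier itself, which needs a cryptographically secure random source, is not shown.

```rust
/// SHA-256 round constants (FIPS 180-4).
const K: [u32; 64] = [
    0x428a2f98, 0x71374491, 0xb5c0fbcf, 0xe9b5dba5, 0x3956c25b, 0x59f111f1, 0x923f82a4, 0xab1c5ed5,
    0xd807aa98, 0x12835b01, 0x243185be, 0x550c7dc3, 0x72be5d74, 0x80deb1fe, 0x9bdc06a7, 0xc19bf174,
    0xe49b69c1, 0xefbe4786, 0x0fc19dc6, 0x240ca1cc, 0x2de92c6f, 0x4a7484aa, 0x5cb0a9dc, 0x76f988da,
    0x983e5152, 0xa831c66d, 0xb00327c8, 0xbf597fc7, 0xc6e00bf3, 0xd5a79147, 0x06ca6351, 0x14292967,
    0x27b70a85, 0x2e1b2138, 0x4d2c6dfc, 0x53380d13, 0x650a7354, 0x766a0abb, 0x81c2c92e, 0x92722c85,
    0xa2bfe8a1, 0xa81a664b, 0xc24b8b70, 0xc76c51a3, 0xd192e819, 0xd6990624, 0xf40e3585, 0x106aa070,
    0x19a4c116, 0x1e376c08, 0x2748774c, 0x34b0bcb5, 0x391c0cb3, 0x4ed8aa4a, 0x5b9cca4f, 0x682e6ff3,
    0x748f82ee, 0x78a5636f, 0x84c87814, 0x8cc70208, 0x90befffa, 0xa4506ceb, 0xbef9a3f7, 0xc67178f2,
];

/// Minimal SHA-256, written out only to keep this sketch dependency-free.
fn sha256(data: &[u8]) -> [u8; 32] {
    let mut h: [u32; 8] = [
        0x6a09e667, 0xbb67ae85, 0x3c6ef372, 0xa54ff53a, 0x510e527f, 0x9b05688c, 0x1f83d9ab, 0x5be0cd19,
    ];
    // Pad: append 0x80, zero-fill to 56 mod 64, then the bit length as a big-endian u64.
    let mut msg = data.to_vec();
    msg.push(0x80);
    while msg.len() % 64 != 56 {
        msg.push(0);
    }
    msg.extend_from_slice(&((data.len() as u64) * 8).to_be_bytes());

    for block in msg.chunks(64) {
        // Expand the 16 input words into the 64-word message schedule.
        let mut w = [0u32; 64];
        for i in 0..16 {
            w[i] = u32::from_be_bytes([block[4 * i], block[4 * i + 1], block[4 * i + 2], block[4 * i + 3]]);
        }
        for i in 16..64 {
            let s0 = w[i - 15].rotate_right(7) ^ w[i - 15].rotate_right(18) ^ (w[i - 15] >> 3);
            let s1 = w[i - 2].rotate_right(17) ^ w[i - 2].rotate_right(19) ^ (w[i - 2] >> 10);
            w[i] = w[i - 16].wrapping_add(s0).wrapping_add(w[i - 7]).wrapping_add(s1);
        }
        // 64 compression rounds over the working variables a..hh.
        let [mut a, mut b, mut c, mut d, mut e, mut f, mut g, mut hh] = h;
        for i in 0..64 {
            let s1 = e.rotate_right(6) ^ e.rotate_right(11) ^ e.rotate_right(25);
            let ch = (e & f) ^ (!e & g);
            let t1 = hh.wrapping_add(s1).wrapping_add(ch).wrapping_add(K[i]).wrapping_add(w[i]);
            let s0 = a.rotate_right(2) ^ a.rotate_right(13) ^ a.rotate_right(22);
            let maj = (a & b) ^ (a & c) ^ (b & c);
            hh = g;
            g = f;
            f = e;
            e = d.wrapping_add(t1);
            d = c;
            c = b;
            b = a;
            a = t1.wrapping_add(s0.wrapping_add(maj));
        }
        for (x, y) in h.iter_mut().zip([a, b, c, d, e, f, g, hh]) {
            *x = x.wrapping_add(y);
        }
    }
    let mut out = [0u8; 32];
    for (i, word) in h.iter().enumerate() {
        out[4 * i..4 * i + 4].copy_from_slice(&word.to_be_bytes());
    }
    out
}

/// Unpadded base64url (RFC 4648, section 5), as RFC 7636 requires.
fn base64url(bytes: &[u8]) -> String {
    const ALPHABET: &[u8; 64] = b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-_";
    let mut out = String::new();
    for chunk in bytes.chunks(3) {
        let n = chunk.iter().enumerate().fold(0u32, |acc, (i, &b)| acc | ((b as u32) << (16 - 8 * i)));
        // 1, 2 or 3 input bytes yield 2, 3 or 4 output characters (no '=' padding).
        for i in 0..=chunk.len() {
            out.push(ALPHABET[((n >> (18 - 6 * i)) & 0x3f) as usize] as char);
        }
    }
    out
}

/// PKCE S256: code_challenge = BASE64URL(SHA256(ASCII(code_verifier))).
fn code_challenge_s256(code_verifier: &str) -> String {
    base64url(&sha256(code_verifier.as_bytes()))
}

/// RFC 7636: a verifier is 43-128 characters from [A-Za-z0-9-._~].
fn is_valid_verifier(v: &str) -> bool {
    (43..=128).contains(&v.len()) && v.bytes().all(|b| b.is_ascii_alphanumeric() || b"-._~".contains(&b))
}
```

The example in RFC 7636 Appendix B makes a handy test vector: the verifier `dBjftJeZ4CVP-mB92K27uhbUJU1p1r_wW1gFWFOEjXk` produces the challenge `E9Melhoa2OwvFrEMTJguCHaoeK1t8URWbuGJSstw-cM`.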

These are the three scenarios in which our authentication solution interacts with incoming requests.
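The decision order behind those three scenarios can be sketched as a small dispatcher. `Route` and `route` are hypothetical names; the sketch models only the branching, not the work done in each branch. In the sample service, a request whose tokens fail validation also ends up at the login redirect, which is why that branch expires any existing token cookies.

```rust
/// The three ways the sample service handles a request (hypothetical type).
#[derive(Debug, PartialEq)]
enum Route {
    /// Returning from the IdP: exchange the code, set session cookies, redirect.
    Callback,
    /// Session cookies present: validate the tokens, then proxy to the origin.
    ValidateAndProxy,
    /// Anything else: start the authorization code flow.
    StartLogin,
}

/// Hypothetical dispatcher, checked in the same order as the post's `main`.
/// It compares the request path with the callback path; the post's snippet
/// compares full URLs with `starts_with` because the callback URL carries
/// `code` and `state` in its query string.
fn route(path: &str, callback_path: &str, has_session_cookies: bool) -> Route {
    if path == callback_path {
        Route::Callback
    } else if has_session_cookies {
        Route::ValidateAndProxy
    } else {
        Route::StartLogin
    }
}
```

Checking the callback first matters: a user returning from the IdP may still hold stale token cookies, and the callback must win over them.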

Wrapping up

The basic concept of performing authentication and then using identity data to make authorization decisions is something that applies to a large majority of web and native apps. Doing this at the edge can offer some very significant advantages to both developers and end users:

Better security, because the authentication process is a single universal implementation that applies across all backend apps.

Better maintainability, because components are decoupled.

Better privacy, because we keep user data where it needs to be and share the minimum amount of data with origin apps.

Better performance, because at the edge we can cache content that requires authentication to access, and requests relating to the authentication process are answered directly at the edge.

This is just one of many possible use cases for edge computing, and a good example of starting to shift state away from core infrastructure. Much of the problem of scaling distributed systems comes down to making state more ephemeral, or more asynchronous. Andrew recently shared more ideas about edge native apps.

We're excited to see what else people make with Compute@Edge!