If you’ll be in Las Vegas for the Black Hat 2025 conference, don’t miss your chance to get an early look at one of our newest security innovations. It’s all about making attackers cry.

We’re bringing more sophisticated defensive techniques to our customers’ security toolboxes while keeping things as simple to use as ever. The Fastly Next-Gen WAF is moving beyond the conventional block/allow paradigm for web application firewalls.

We’re introducing a new action type, “Deception,” within the Next-Gen WAF that lets our customers deceive attackers and bot operators. It addresses a common attack vector, account takeover (ATO), in a new way, and it requires no setup or maintenance from the customer beyond using the action in a rule!

With this new action, attackers are left thinking that their compromised credentials are invalid, not that they’ve been detected and traced. They waste time and resources, question their own toolkit, and get caught without knowing it. The goal is to frustrate attackers into giving up and going somewhere else, but not before their activity has been captured.

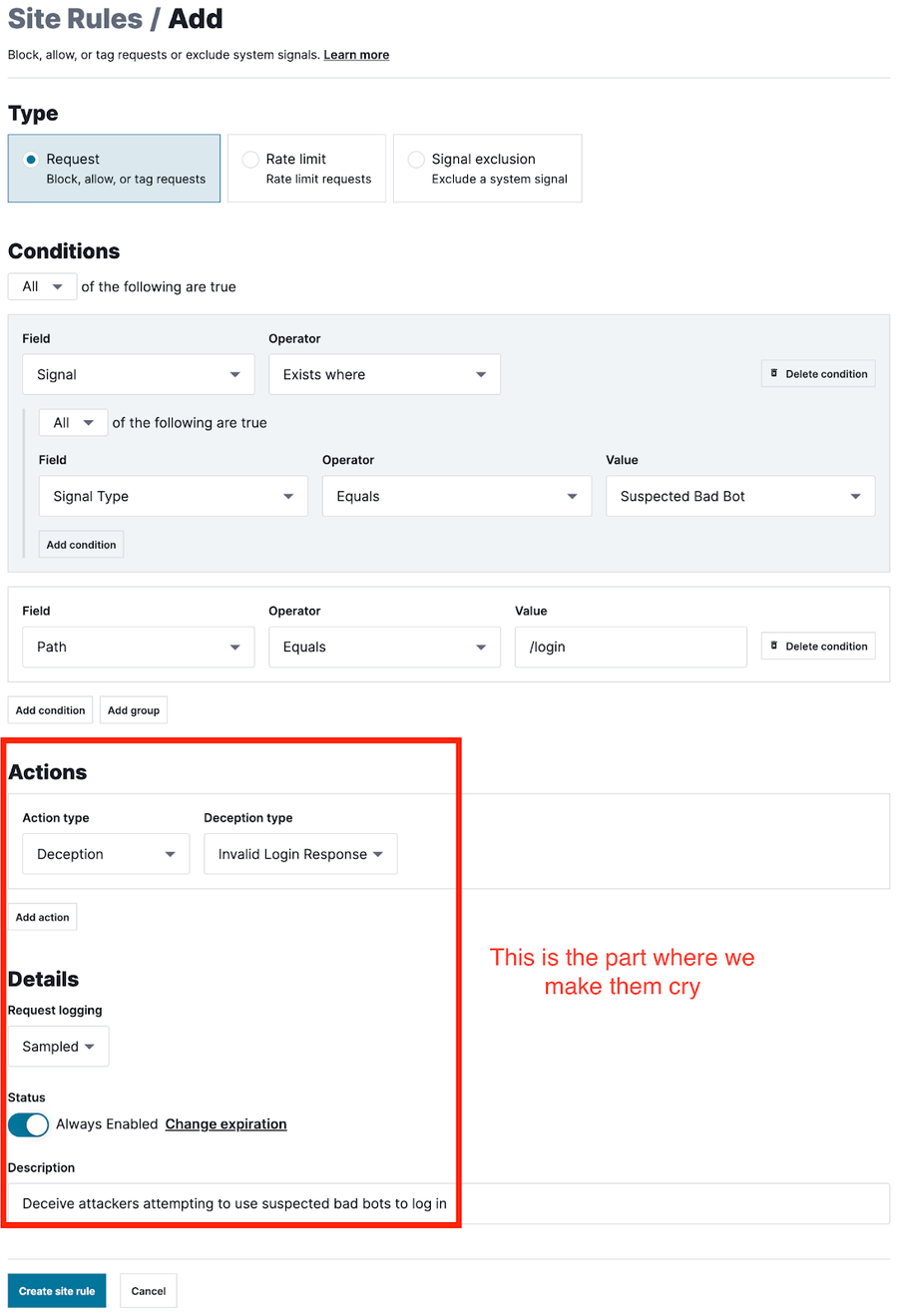

(Figure 1: Configuration)

Whenever a rule using this action is triggered, the Next-Gen WAF generates a signal indicating a deception response.

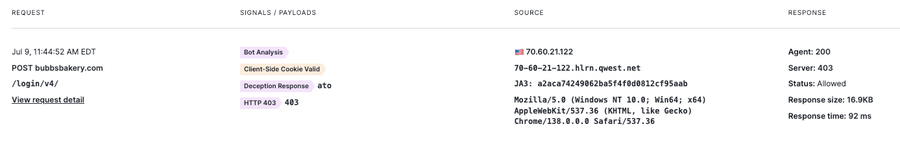

(Figure 2: Request and Signals)

This new action helps turn the tables on attackers by using their own curiosity and persistence against them. It significantly bolsters the protective posture of our WAF customers and provides a continuous feedback loop that fuels more intelligent and adaptive security measures.

First things first: what is deception?

Deception is not a new concept, in cybersecurity or anywhere else. It has been used successfully throughout history, especially in warfare. It also borrows from common traits in the natural world like camouflage and mimicry: imagine a stick insect, or a harmless snake with a banded pattern similar to a venomous one’s.

A classic example in both history and security is the Trojan horse, from which a common cyber attack vector derives its name. Deception can be very simple, like running a duplicate application server with vulnerabilities to distract an attacker from the real thing, or it can be complex, effectively gaslighting an attacker to the point where they have little idea about what is real and what is fake.

Instead of simply denying access to a resource, deception involves the subtle and strategic redirection of potential attackers with carefully crafted misinformation or simulated behaviors. Deception introduces confusion, friction, and frustration for cyberattackers, which often leads them to stop their attacks and move on to easier targets. It can also lull attackers into a false sense of security, which prompts them to reveal actionable intelligence through their observed behaviors within a deceptive system.

While some might think of “deceptive” practices as morally questionable or even antithetical to their organizational values, research has shown that these techniques can be effective and can be deployed responsibly as a layer of “noise” that stops attackers from succeeding in their own unethical goals. In other words: there’s never been a better time to fight fire with fire.

The problem: attackers aren’t stupid

Many people think of deception in the context of honeypot infrastructure, which involves creating a duplicate version of a system for attackers to target instead of the real one. This might mean spinning up extra instances of application servers, or even running an entire parallel network infrastructure. These techniques have been used for decades and can be very effective, but they are resource-intensive, complex to manage, and risky, too.

Another form of deception might involve “pretending your application is more secure than it is”: picture a fake security camera installed outside your door, or a bird that puffs up its feathers to seem larger in the face of a threat. These methods are well established and field-tested (especially by the birds!).

But what’s the problem with a tried-and-true method? Exactly what you might think. Attackers are smart – they know all about honeypots and other traditional forms of deception, and they’re on the lookout for these types of tactics. They know that the security camera above your door is probably fake.

The solution: make attackers cry

To keep up with evolving attack methods, deception needs to evolve too. Understanding attacker behavior starts with understanding how humans learn, behave, and make decisions. Since attackers are human and automated attacks are designed and launched by humans, we can apply principles learned from the ways humans behave to fight back.

Let’s go back to the web application firewall example. Traditional WAF blocking is a game of cat and mouse: an attacker attempts to break in, and the WAF detects the request and blocks it. When the WAF blocks a request, the attacker knows they’ve been caught and can immediately start retooling to evade the defenses. Deception changes the rules of that game by hiding the detection, which makes it much harder for the attacker to know what to adapt to.

Now let’s look at deception in the Next-Gen WAF. Instead of being visibly blocked during an account takeover attack, the attacker simply thinks their stolen credentials don’t work. That confuses and frustrates them, since they probably paid real money for those credentials and expected them to work. In reality, they’ve been detected, denied access, and tricked, and they have no idea. This bamboozle makes attackers doubt their own methods, wastes their resources, and hopefully encourages them to leave and try somewhere else.
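To make the contrast with a conventional block concrete, here is a minimal Python sketch of the idea. It is not Fastly’s implementation: the `Waf` class, `handle_login`, the `is_flagged` input, the response wording, the status codes, and the `"deception"` signal label are all hypothetical names chosen for illustration. The property it demonstrates is that a flagged request gets exactly the same response as an ordinary failed login, while a signal is recorded on the defender’s side.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Response:
    status: int
    body: str


# What any user sees after a wrong password (hypothetical wording).
INVALID_LOGIN = Response(401, "Invalid username or password.")
# A distinct response that reveals the block (illustrative status code).
BLOCKED = Response(403, "Request blocked.")


@dataclass
class Waf:
    """Toy stand-in for a WAF rule engine; not a real product API."""
    mode: str  # "block" or "deception"
    signals: list = field(default_factory=list)

    def handle_login(self, client_ip, is_flagged, check_credentials):
        if not is_flagged:
            # Normal traffic reaches the application's own login check.
            ok = check_credentials()
            return Response(200, "Welcome back.") if ok else INVALID_LOGIN
        if self.mode == "block":
            # Conventional behavior: the attacker can see they were caught.
            self.signals.append(("blocked", client_ip))
            return BLOCKED
        # Deception: skip the real credential check, answer exactly like a
        # failed login, and record a signal for the defender.
        self.signals.append(("deception", client_ip))
        return INVALID_LOGIN
```

In this sketch, calling `Waf(mode="deception").handle_login(ip, True, check)` returns `INVALID_LOGIN` even when the stolen credentials are valid, so from the outside the attacker cannot tell a detection apart from a bad password.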

But more importantly, this tactic puts a real mental load on the attacker, which has the understandable consequence of making them cranky. Put another way, slowing attackers down raises their opportunity cost. Attacks carry real infrastructure and labor costs: choosing targets, launching the attack, conducting operations, and deciding when to end the campaign. Adding friction to this process costs attackers real money, time, and effort, and deploying deception strategically to make attackers cry can directly influence their behavior.

Cybercrime is a business with a P&L like any other. By introducing friction, uncertainty, and wasted effort, our Deception action directly attacks the attacker’s bottom line. It can turn their expected ROI into a net loss, making your organization an unprofitable target.
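A back-of-the-envelope model shows how that flip can happen. The Python sketch below uses made-up numbers purely for illustration: the function name `campaign_profit` and every figure passed to it are assumptions, not measured data, and the deception case assumes every login attempt in the campaign is flagged.

```python
def campaign_profit(credentials, success_rate, value_per_account,
                    cost_per_credential, operating_cost):
    """Expected attacker profit for one credential-stuffing campaign."""
    revenue = credentials * success_rate * value_per_account
    cost = credentials * cost_per_credential + operating_cost
    return revenue - cost


# Hypothetical baseline: 10,000 purchased credentials, 2% of logins succeed.
baseline = campaign_profit(10_000, 0.02, 10.0, 0.05, 500.0)

# Hypothetical deception case: flagged logins all look invalid, so the
# success rate drops to zero, and time spent debugging a "broken" toolkit
# raises the operating cost.
deceived = campaign_profit(10_000, 0.0, 10.0, 0.05, 1_500.0)

print(f"baseline: {baseline:+.2f}, with deception: {deceived:+.2f}")
```

With these invented inputs, a campaign that would have cleared a profit of 1,000 ends at a loss of 2,000. The exact figures don’t matter; the point is that deception pushes on both sides of the equation at once, cutting revenue while inflating cost.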

Going deeper into deception

How sophisticated can this get? The possibilities are endless. Deception can be used to create what are known as “deception environments”: sending an attacker into a funhouse full of mirrors so complex that they aren’t even sure which way is up, or whether they can trust their own judgment.

These are isolated replica environments containing complete, fully functioning systems that are designed and built entirely to attract, mislead, and observe attackers. By tracing attacker behavior within them, deception environments can unlock “attack observability.” Traditional observability compares how systems and applications actually behave against the baseline of how they “should” behave. Attack observability does the same for adversaries: it helps system owners validate their beliefs about attacker behavior with real data, both to make real-time decisions and to improve system designs and defenses.

Deception can provide profound insights into current and evolving attack methodologies. As attackers interact with a deceptive environment, their actions, techniques, and objectives are recorded and analyzed without exposing actual vulnerabilities. This intelligence can help system owners understand adversary tactics and proactively strengthen their defenses.

Simply put: if attackers don’t know that they’re being deceived, they will behave in ways that expose useful insights into how current and future attacks are staged, launched, and adjusted in real time.

“By harnessing modern infrastructure and systems design expertise, software engineering teams can use deception tactics that are largely inaccessible to security specialists. Engineering teams are now poised — with support from advancements in computing — to become significantly more successful owners of deception systems” (Shortridge & Petrich, 2021)

Get ready to bamboozle

We’re thrilled to introduce deception capabilities to the Fastly Next-Gen WAF and to make them straightforward for our customers to use within the product and rules they already build and use today. Future releases will expand deception capabilities to other attack types and into other Fastly security products.

Our vision is to embed deception into all of our new and existing security products to create end-to-end attacker tracing across your production environments. We’ll help you make attackers feel insecure and give them a taste of their own medicine.

Fastly is heading to Black Hat USA 2025, one of the biggest stages for security innovation and insights. Join us at booth #2661 on August 6–7 as we showcase how Fastly’s edge cloud platform and Next-Gen WAF are helping organizations stay fast, secure, and resilient.

Not able to make it to Black Hat this year? Don’t fret! Chat with our team of security experts for a personalized demo to see what Fastly can do for you.