Friday night’s boxing match was set to be a marquee event, but for many fans, the real fight wasn’t in the ring—it was with the stream. Buffering issues and dropped connections left viewers frustrated, their disappointment echoing louder than the excitement of the main event. Moments like these remind us that delivering under pressure is never easy—we’ve faced our own challenges along the way. Each setback has been a profound teacher, shaping and refining how we think about live event streaming and pushing us to innovate further. With each lesson, we move closer to creating experiences that fans everywhere can count on, no matter the moment.

The surge of simultaneous viewers for live events places immense pressure on platforms, requiring architecture that can handle real-time scalability, low latency, and unpredictable spikes. It’s a complex and unforgiving task, and even the most prepared teams can face obstacles.

What happened to Netflix on Friday night is a challenge no platform wants to face, and we empathize deeply with their team. Disruptions are a tough reality of our industry, and while the specific cause remains unclear, it highlights a broader truth: many things can go wrong during these large events. Even the best-laid plans can go awry, but over the years we have learned which steps to take to limit poor viewer experiences when traffic surges during a large live event.

Below are the key lessons we've learned from planning and executing successful live streaming events.

11 Essentials for a Successful Live Streaming Event

1. Request Collapsing

Request collapsing in live streaming is a technique used to manage the high volume of simultaneous requests for the same video segment that occurs as thousands or even millions of viewers watch a live event in near real-time. When multiple users request the same segment—such as a specific 2-5 second chunk of video—within a short time frame, a CDN or edge server consolidates these requests into a single request to the origin server. Once the segment is fetched and cached, it is used to serve all the collapsed requests without requiring repeated trips to the origin.

This not only reduces the load on the origin server but also ensures that viewers experience minimal latency and smooth playback. By preventing duplicate origin requests and mitigating sudden spikes in demand, request collapsing is essential for maintaining reliability and scalability in live streaming.
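To make the idea concrete, here is a minimal sketch of request collapsing in Python. It is an illustration only, not how Fastly or any particular CDN implements it: the `CollapsingCache` class, the `simulate_burst` helper, and the segment name are all hypothetical. The first request for a segment becomes the "leader" and goes to the origin, while every concurrent request for the same segment waits for that result.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class CollapsingCache:
    """Collapse concurrent requests for one key into a single origin fetch."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # hypothetical callable: key -> segment
        self._lock = threading.Lock()
        self._cache = {}                 # key -> cached segment
        self._in_flight = {}             # key -> Event set when the fetch ends

    def get(self, key):
        with self._lock:
            if key in self._cache:
                return self._cache[key]
            done = self._in_flight.get(key)
            is_leader = done is None
            if is_leader:
                done = threading.Event()
                self._in_flight[key] = done

        if not is_leader:
            # Another request is already fetching this segment: wait for it.
            done.wait()
            # Normally a cache hit; retries the fetch if the leader failed.
            return self.get(key)

        try:
            segment = self._fetch(key)
            with self._lock:
                self._cache[key] = segment
            return segment
        finally:
            with self._lock:
                del self._in_flight[key]
            done.set()


def simulate_burst(viewers):
    """Send `viewers` simultaneous requests for the same (made-up) segment."""
    origin_calls = []

    def slow_origin(key):
        origin_calls.append(key)
        time.sleep(0.05)  # pretend the origin takes 50 ms to respond
        return "bytes:" + key

    cache = CollapsingCache(slow_origin)
    with ThreadPoolExecutor(max_workers=viewers) as pool:
        results = list(pool.map(cache.get, ["seg-1042.ts"] * viewers))
    return origin_calls, results
```

Calling `simulate_burst(20)` issues twenty simultaneous requests, yet the origin is contacted once and all twenty callers receive the same segment. This sketch leaves out expiry and eviction; a production cache would also need to handle segments rolling out of the live window.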

BONUS! Fastly Media Shield enhances our existing origin shielding and request collapsing features, extending them to multi-CDN deployments outside the Fastly network. With minimal changes to your current workflows, you can significantly reduce requests to your origin infrastructure by placing Fastly Media Shield behind your multi-CDN setup.

Fastly’s Media Shield optimizes multi-CDN setups while lowering the total cost of ownership (TCO) for your streaming services. By drastically decreasing the traffic hitting your origin, you can cut costs and reduce the amount of infrastructure needed to support your video services.

2. Precision Path

Fastly’s Precision Path continuously monitors all origin connections across every Fastly Point of Presence (PoP) worldwide. When an underperforming connection is identified, the system automatically evaluates all possible alternative paths to that origin and reroutes the connection to the most optimal path. This process happens in real-time, often allowing Fastly to restore a healthy origin connection before 5xx errors are served to end users, ensuring minimal disruption to the viewing experience.

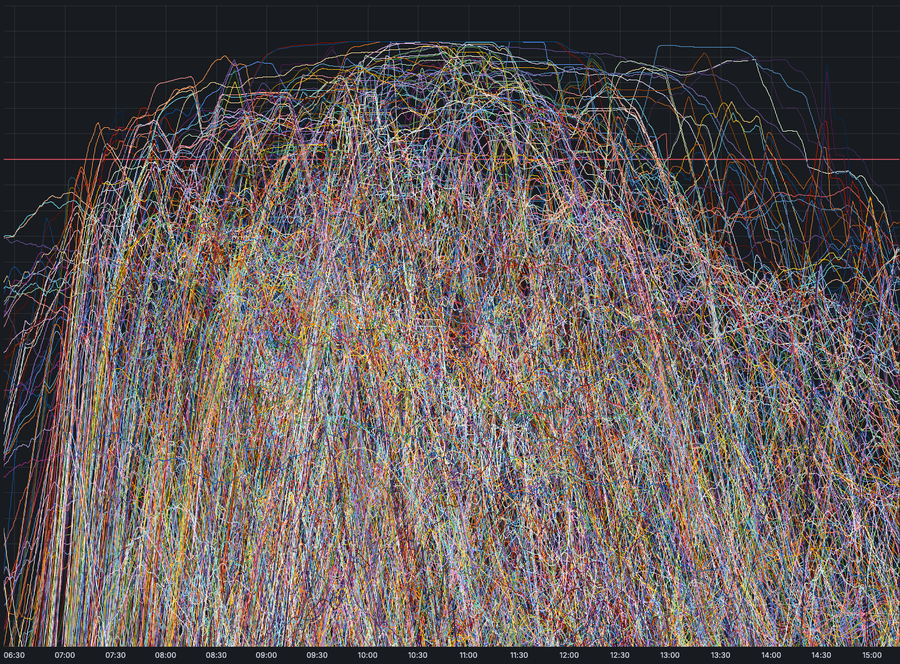

When preferred interconnect paths become congested, secondary paths ensure uninterrupted content delivery. Source: 24-hour snapshot of Fastly traffic
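The rerouting decision described above can be sketched roughly as follows. This is a simplified illustration and not Fastly's actual Precision Path logic: the `PathHealth` fields, the loss and round-trip thresholds, and the path names are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathHealth:
    name: str
    loss_rate: float  # fraction of probes lost, 0.0 to 1.0 (assumed metric)
    rtt_ms: float     # round-trip time in milliseconds (assumed metric)


def is_degraded(path, max_loss=0.02, max_rtt_ms=250.0):
    """Hypothetical health check; the thresholds are illustrative only."""
    return path.loss_rate > max_loss or path.rtt_ms > max_rtt_ms


def choose_path(current, alternatives):
    """Keep the current path while healthy, else move to the best alternative."""
    if not is_degraded(current):
        return current
    healthy = [p for p in alternatives if not is_degraded(p)]
    # If nothing is healthy, fall back to the least-bad option overall.
    pool = healthy or [current, *alternatives]
    return min(pool, key=lambda p: (p.loss_rate, p.rtt_ms))


current = PathHealth("transit-a", loss_rate=0.08, rtt_ms=120.0)
alternatives = [
    PathHealth("transit-b", loss_rate=0.00, rtt_ms=95.0),
    PathHealth("peering-c", loss_rate=0.01, rtt_ms=60.0),
]
print(choose_path(current, alternatives).name)  # transit-b
```

Here the current path is losing 8% of probes, so the connection moves to `transit-b`, which has the lowest loss among the healthy alternatives. Ranking by loss first and latency second is one possible design choice; a real system would weigh many more signals.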

CDNs continuously work to expand and increase their interconnect capacity with "eyeball networks," which refer to the internet service providers (ISPs) and networks that deliver content directly to end users. This capacity expansion is a cyclical process that requires careful planning, provisioning, and infrastructure deployment, including networking equipment and fiber connections. For instance, if a CDN approaches the interconnect capacity of a major ISP, the next expansion cycle is triggered. However, these expansions are not instantaneous—they require collaboration between CDNs and ISPs to deploy the necessary equipment and upgrade infrastructure, which takes time.

In the past, capacity planning cycles were longer, often based on annual peaks such as the surge between Black Friday and the Super Bowl. CDNs could provision enough capacity to handle these peaks and then use the intervening months to plan and execute upgrades for the following year. However, with new content categories like gaming, global sports events, and patch downloads, the cadence has accelerated significantly. Quarterly or even monthly peaks are now the norm, driven by events like World Cups and significant gaming updates, creating constant pressure to adjust and expand capacity.

3. Autopilot

Fastly’s Autopilot addresses the limitations of Precision Path by focusing on smarter decision-making rather than just speed. While Precision Path quickly moves traffic away from failed routes, Autopilot shifts larger volumes of traffic towards better-performing and less congested parts of the network.

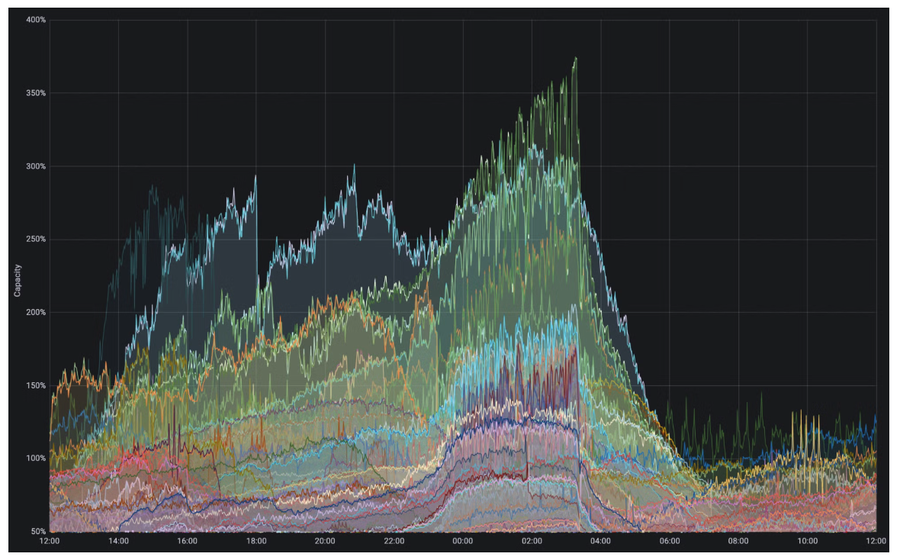

Egress interface traffic demand over capacity. Multiple interfaces had demand exceeding three times the physical capacity available during Super Bowl 2023.

Autopilot acts as a controller that gathers network telemetry in real time, analyzes traffic, and identifies opportunities to reroute it. If a link is nearing full capacity or the current path for a destination is underperforming compared to alternatives, Autopilot proactively shifts traffic to better routes.
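As a rough illustration of this kind of controller, the sketch below moves demand off links that exceed a target utilization and onto links with spare headroom. It is not Autopilot's actual algorithm: the `rebalance` function, the 80% target, and the link names and numbers are assumptions made for the example.

```python
def rebalance(links, target_utilization=0.8):
    """Shift demand from overloaded links to links with headroom.

    `links` maps a link name to (demand_gbps, capacity_gbps).
    Returns a new mapping of link name -> demand_gbps after shifting.
    """
    demand = {name: d for name, (d, _) in links.items()}
    limit = {name: c * target_utilization for name, (_, c) in links.items()}

    # Handle the most overloaded links first.
    overloaded = sorted(demand, key=lambda n: demand[n] - limit[n], reverse=True)
    for name in overloaded:
        excess = demand[name] - limit[name]
        if excess <= 0:
            continue
        # Prefer the links with the most spare room below their own limit.
        targets = sorted(demand, key=lambda n: limit[n] - demand[n], reverse=True)
        for other in targets:
            headroom = limit[other] - demand[other]
            if other == name or headroom <= 0:
                continue
            moved = min(excess, headroom)
            demand[name] -= moved
            demand[other] += moved
            excess -= moved
            if excess <= 0:
                break
    return demand


links = {"link-a": (95.0, 100.0), "link-b": (30.0, 100.0), "link-c": (70.0, 100.0)}
print(rebalance(links))
```

With these made-up numbers, `link-a` is 15 Gbps over its 80 Gbps target, so that excess moves to `link-b`, the link with the most headroom, and total demand is unchanged. If every link is already at its limit, the leftover excess simply stays where it is, which is the situation a real controller would need to escalate.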

4. Capacity management

At its core, capacity management is about strategic planning and real-time adjustments. By analyzing historical data and utilizing advanced analytics, streaming platforms can predict viewer demand and prepare accordingly. Technologies like adaptive bitrate streaming optimize bandwidth usage by adjusting video quality based on a viewer's internet speed and device capabilities.
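A minimal sketch of the adaptive bitrate decision might look like the following. The rendition ladder, the `select_rendition` name, and the 0.8 safety factor are illustrative assumptions; real players use more elaborate logic that also accounts for buffer level and device capabilities.

```python
# Hypothetical rendition ladder, in kilobits per second.
LADDER_KBPS = [400, 1200, 2500, 5000, 8000]


def select_rendition(measured_throughput_kbps, ladder=LADDER_KBPS, safety_factor=0.8):
    """Pick the highest rendition that fits within a fraction of the throughput."""
    budget = measured_throughput_kbps * safety_factor
    affordable = [rate for rate in ladder if rate <= budget]
    # Fall back to the lowest rendition rather than stalling playback.
    return max(affordable) if affordable else min(ladder)


print(select_rendition(4000))   # 2500
print(select_rendition(100))    # 400
print(select_rendition(20000))  # 8000
```

The safety factor leaves some margin so that a small dip in throughput does not immediately drain the player's buffer.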

Distributing traffic across multiple servers and data centers reduces the risk of overloading any single resource. Furthermore, failover mechanisms and edge computing enhance resilience, ensuring that streams remain available even during network disruptions or server failures. This careful orchestration of resources allows livestreaming providers to meet the demands of millions of viewers simultaneously while delivering a seamless and reliable viewing experience.

5. Prepare for a surge of organic traffic

Preparing your tech stack to handle surges in organic traffic is crucial for maintaining performance, reliability, and user satisfaction during large live streams. As your content gains traction, your infrastructure must be ready to scale and manage the increased traffic effectively. Load balancers can efficiently distribute traffic across servers, maintaining consistent performance even during peak periods.

By caching content closer to end users, latency is reduced, and the load on your origin servers is alleviated, ensuring quick load times even under heavy traffic. Monitoring tools (see below) and analytics are also essential, providing real-time insights into traffic patterns and system performance. The data lets your team anticipate potential bottlenecks and take proactive measures to ensure a seamless user experience. With the right tech stack, businesses can not only withstand traffic surges but also turn them into opportunities for growth and engagement.

6. Downstream saturation

Downstream saturation in live streaming or large events happens when a network downstream from the CDN experiences capacity constraints, causing bottlenecks that disrupt data flow. This often occurs during high-demand events as large audiences request the same stream, overwhelming local ISP infrastructure. The metro or regional capacity is particularly vulnerable, leading to buffering, delays, or reduced video quality.

To combat this, streaming platforms rely on adaptive bitrate streaming, in which the player adjusts video quality in real time based on available bandwidth to keep playback smooth. Regional caching stores content closer to users to reduce network strain.

7. Multi-tenant platform

A multi-tenant platform is an infrastructure that serves multiple customers using the same underlying system while maintaining logical separation between them. Businesses or organizations share Fastly’s resources, such as servers, caching nodes, and network capacity, while their data, configurations, and operations remain isolated. This design ensures security and privacy while allowing the CDN to optimize resource utilization across many users.

Fastly’s unified infrastructure means that customers using our CDN can benefit from continuous feature updates, security improvements, and performance enhancements rolled out across the platform. By leveraging a multi-tenant model, Fastly enables businesses to operate independently on a shared infrastructure while still enjoying the advanced capabilities and innovations of its edge cloud technology.

With a single-tenant infrastructure, businesses carry the risk of overbuilding or underbuilding capacity for a specific event, which can lead to inefficiencies or unmet demand. In contrast, the multi-tenant model introduces multiple inputs into capacity planning, distributing the load across numerous customers and events. This means capacity is built to accommodate diverse use cases, making it more efficient and sensible to invest in expanding infrastructure. Even if one event doesn’t reach its anticipated scale, the shared nature of the platform ensures that resources are still effectively utilized, benefiting other customers and their needs.
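The arithmetic behind this argument can be shown with a small example. The tenant names and demand figures below are invented purely for illustration: when each tenant builds for its own peak, total capacity is the sum of the individual peaks, whereas a shared platform only needs to cover the peak of the combined demand, which is lower whenever the peaks do not coincide.

```python
def dedicated_capacity(tenant_demand):
    """Single-tenant model: every tenant provisions for its own peak."""
    return sum(max(series) for series in tenant_demand.values())


def shared_capacity(tenant_demand):
    """Multi-tenant model: provision for the peak of the combined demand."""
    combined = [sum(values) for values in zip(*tenant_demand.values())]
    return max(combined)


# Hypothetical demand in Gbps across four time slots.
demand = {
    "sports": [10, 90, 20, 10],
    "gaming": [15, 10, 80, 10],
    "retail": [60, 15, 10, 10],
}

print(dedicated_capacity(demand))  # 230
print(shared_capacity(demand))     # 115
```

In this toy data set the three tenants would build 230 Gbps between them if each planned alone, while the shared platform needs 115 Gbps to cover the busiest combined slot. The gap shrinks if tenants peak at the same time, which is why real capacity planning still keeps headroom.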

8. Real-time logging gives you the ability to react at a moment’s notice

Our game-changing real-time logging provides exceptional visibility into your live online streams as events occur. With instant access to detailed logs, you can monitor critical metrics such as playback performance, viewer engagement, and error rates in real-time. This immediate feedback loop allows you to identify and resolve issues like buffering, latency spikes, or delivery errors before they escalate and affect your audience. In live streaming, where audience expectations are high and every second matters, having this level of insight ensures a smoother, uninterrupted viewing experience.

Furthermore, real-time visibility empowers you to make data-driven decisions on the spot. Whether you need to optimize your delivery routes, reallocate bandwidth, or quickly address problems with your origin server, real-time logging keeps you in control of your stream. This proactive approach not only enhances the reliability of your live content but also builds trust with your audience, ensuring they remain engaged with your stream rather than seeking alternatives. In the fast-paced world of live online streaming, real-time visibility is not just beneficial—it is essential.
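As one example of what a team might do with a live log stream, the sketch below tracks the share of 5xx responses over a sliding time window. The `ErrorRateWindow` class, the ten-second window, and the shape of the log records (a timestamp and an HTTP status) are assumptions for illustration and do not reflect any specific log format.

```python
from collections import deque


class ErrorRateWindow:
    """Track the fraction of 5xx responses seen in the last N seconds."""

    def __init__(self, window_seconds=10.0):
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, is_error) in arrival order
        self._errors = 0

    def record(self, timestamp, status):
        is_error = status >= 500
        self._events.append((timestamp, is_error))
        self._errors += is_error
        # Drop events that have aged out of the window.
        cutoff = timestamp - self.window_seconds
        while self._events and self._events[0][0] <= cutoff:
            _, was_error = self._events.popleft()
            self._errors -= was_error

    def error_rate(self):
        return self._errors / len(self._events) if self._events else 0.0


window = ErrorRateWindow(window_seconds=10.0)
for second in range(10):
    window.record(timestamp=float(second), status=200)
window.record(timestamp=10.0, status=503)
window.record(timestamp=11.0, status=503)
print(window.error_rate())  # 0.2
```

An alert could fire when `error_rate()` crosses a chosen threshold, giving operators a signal within seconds rather than after viewers have already left.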

9. Origin Connectivity

Origin connectivity refers to the communication pathway between the origin server, where live content is ingested and hosted, and the CDN that distributes this content to viewers around the globe. Strong and reliable origin connectivity is essential for delivering live streaming content efficiently to edge nodes, which then serve it to end-users with minimal latency and buffering.

This connectivity is crucial for maintaining a high-quality viewer experience during live events. Any interruptions or degradation in this connection can result in dropped frames, lag, or even complete service outages. To mitigate these risks, CDNs commonly utilize strategies such as origin shielding, redundant origin connections, and dynamic origin selection. These techniques optimize the flow of data from the origin server to edge locations, reduce bandwidth strain on the origin server, and provide failover options in case of server disruptions. In scenarios where real-time delivery is critical, ensuring robust origin connectivity is a foundational element for a successful and seamless broadcast.
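The failover aspect of redundant origin connections can be sketched as follows. The `fetch_with_failover` function, the origin names, and the injected `fetch` callable are hypothetical; the example only shows the general pattern of trying origins in priority order.

```python
def fetch_with_failover(path, origins, fetch):
    """Try each origin in order and return (origin, body) from the first success.

    `fetch` is a caller-supplied function (origin, path) -> body that raises
    ConnectionError when an origin is unreachable.
    """
    failures = []
    for origin in origins:
        try:
            return origin, fetch(origin, path)
        except ConnectionError as exc:
            failures.append(f"{origin}: {exc}")
    raise ConnectionError(f"all origins failed for {path}: {failures}")


def fake_fetch(origin, path):
    """Stand-in for a real HTTP client; the primary origin is 'down'."""
    if origin == "origin-primary":
        raise ConnectionError("connection timed out")
    return f"{path} served by {origin}"


origin, body = fetch_with_failover(
    "/live/seg-1042.ts", ["origin-primary", "origin-backup"], fake_fetch
)
print(origin)  # origin-backup
```

Passing the fetch function in as a parameter keeps the sketch independent of any HTTP library and makes the failover logic easy to test.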

10. Online Security

Although online security does not directly affect the streaming quality of large live events, its importance is significant. Malicious actors often target video streams with the intent to disrupt broadcasts and take them offline. These attacks can arise from various motives, such as political agendas or the desire for notoriety that comes with sabotaging an event watched by millions. A single security breach can undermine not only the reliability of the broadcast but also the trust of the audience and stakeholders. Therefore, proactive security measures are essential to ensure uninterrupted streaming and to protect the integrity of the event as well as the viewing experience.

11. Measure what matters – Quality of Experience

Metrics are the lifeblood of delivering a seamless online streaming experience, especially for large-scale events where every second matters.

Three key metrics stand out in importance:

Bitrate measures the quality of the video stream, determining how sharp and smooth the content looks to the viewer. A high, stable bitrate ensures that viewers enjoy crisp content, whereas fluctuations or drops can lead to pixelation and degraded quality, breaking the immersion of live events.

Rebuffering Ratio is another critical metric that indicates what percentage of viewers experience interruptions due to the video player running out of content to display. Rebuffering moments are not just frustrating—they can severely impact a viewer's perception of the event and the platform delivering it.

Video Startup Failure (VSF) is a fatal flaw in streaming. This occurs when the player fails to load the content entirely, leaving the viewer stuck with a blank screen. Unlike a momentary buffering issue, VSF leaves viewers without any access to the event, which can significantly harm both reputation and viewer trust.
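To show how these three metrics might be derived from player data, here is a small sketch. The `Session` fields and the sample values are hypothetical. It reports the share of viewers who hit any rebuffering, as described above, alongside a time-based rebuffering ratio (stall time divided by session time), which is another common way the metric is defined.

```python
from dataclasses import dataclass


@dataclass
class Session:
    started: bool            # False means the video never began playing
    watch_seconds: float     # total session time, including stalls
    rebuffer_seconds: float  # time spent stalled waiting for data
    avg_bitrate_kbps: float  # average bitrate delivered to this viewer


def qoe_summary(sessions):
    played = [s for s in sessions if s.started]
    total_watch = sum(s.watch_seconds for s in played)
    return {
        # Share of playback attempts that never started.
        "vsf_rate": (len(sessions) - len(played)) / len(sessions) if sessions else 0.0,
        # Share of viewers who experienced at least one stall.
        "viewers_rebuffering": (
            sum(s.rebuffer_seconds > 0 for s in played) / len(played) if played else 0.0
        ),
        # Share of total session time spent stalled.
        "rebuffer_time_ratio": (
            sum(s.rebuffer_seconds for s in played) / total_watch if total_watch else 0.0
        ),
        # Bitrate weighted by how long each viewer watched.
        "avg_bitrate_kbps": (
            sum(s.avg_bitrate_kbps * s.watch_seconds for s in played) / total_watch
            if total_watch else 0.0
        ),
    }


sessions = [
    Session(True, 600.0, 6.0, 5000.0),
    Session(True, 300.0, 0.0, 2500.0),
    Session(False, 0.0, 0.0, 0.0),
    Session(True, 100.0, 4.0, 1200.0),
]
print(qoe_summary(sessions))
```

For this sample, one of four attempts fails to start (a VSF rate of 0.25), two of the three viewers who did start see a stall, 1% of session time is spent rebuffering, and the watch-time-weighted bitrate is 3870 kbps.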

Optimizing for these metrics often involves sophisticated strategies, including multi-CDN architectures. When primary and secondary paths to deliver content become saturated—a not uncommon scenario during high-demand events—leveraging the collective capacity of multiple CDNs ensures the stream continues uninterrupted. These metrics not only guide technical improvements but also serve as a reflection of the viewer experience, making them essential benchmarks for success in the competitive landscape of live streaming.

Final thoughts

In the coming months, Netflix is stepping up its live event programming with some exciting offerings, including upcoming NFL games and a perhaps even more anticipated halftime show featuring Beyoncé, which is very likely to captivate a large audience worldwide.

Live streaming has become a cornerstone of modern entertainment, combining the immediacy of live events with the accessibility of on-demand platforms. The challenges Netflix faced aren’t isolated—they highlight the intricate and ever-evolving complexities of delivering live content at scale.

At Fastly, we’ve built our expertise on delivering some of the world’s largest online events. We know the stakes are high because we’ve lived them—ensuring every frame reaches audiences as intended. Live streaming is more than a technical achievement; it’s about preserving trust, excitement, and connection with fans and subscribers. It’s why we continue to innovate and refine our approach, knowing the margin for error is razor-thin and the expectations of viewers are higher than ever.